MG-RAST user manual¶

Motivation¶

MG-RAST provides Science as a Service for environmental DNA (“metagenomic sequences”) at https://mg-rast.org.

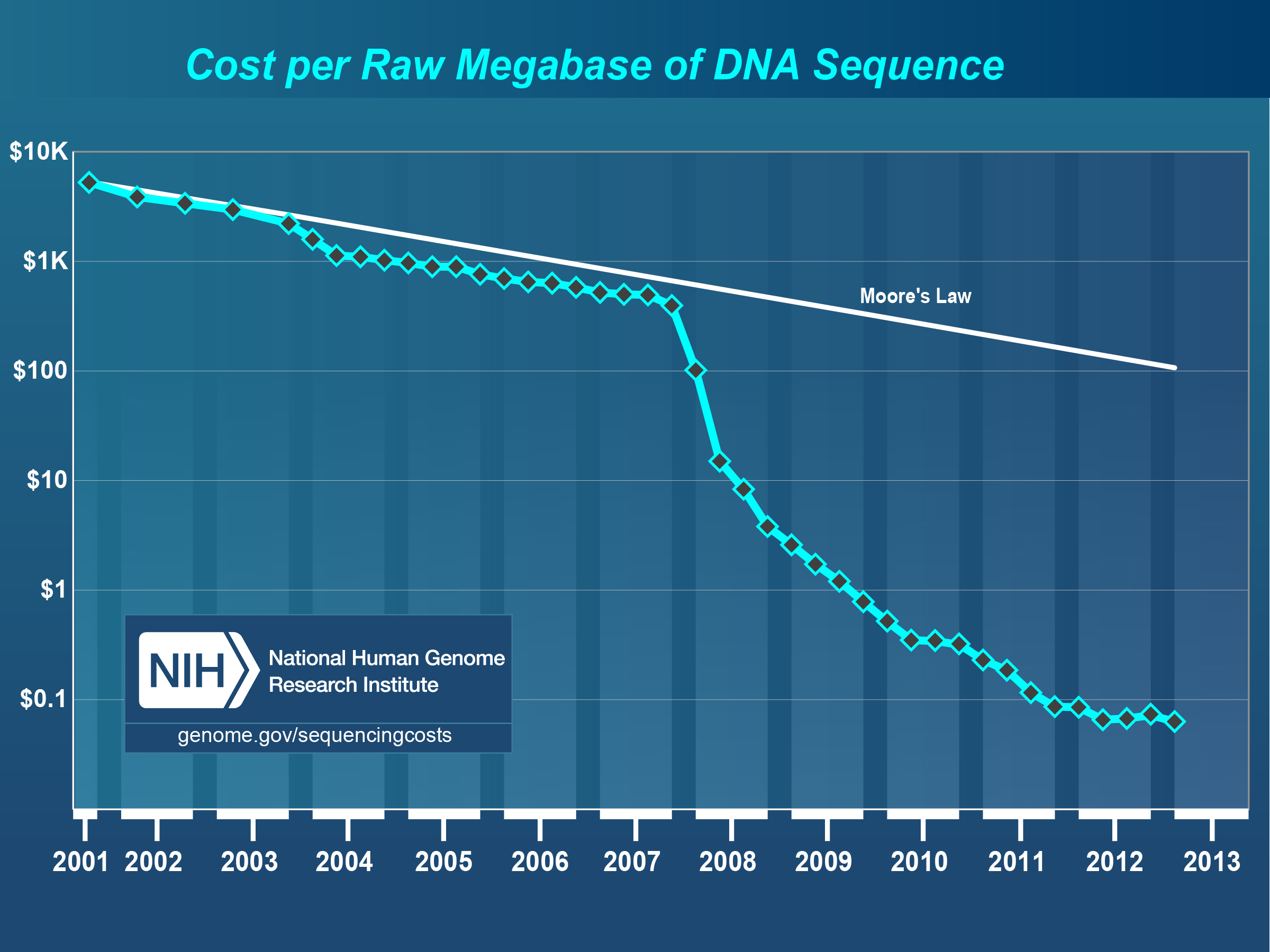

The National Human Genome Research Institute (NHGRI), a division of the National Institutes of Health, publishes information (see Figure [fig:cost_per_megabase]) describing the development of computing costs and DNA sequencing costs over time (Institute 2012). The dramatic gap between the shrinking costs of sequencing and the more or less stable costs of computing is a major challenge for biomedical researchers trying to use next-generation DNA sequencing platforms to obtain information on microbial communities. Wilkening et al. (Wilkening et al. 2009) provide a real currency cost for the analysis of 100 gigabasepairs of DNA sequence data using BLASTX on Amazon’s EC2 service: $300,000. [1] A more recent study by University of Maryland researchers (Angiuoli et al. 2011) estimates the computation for a terabase of DNA shotgun data using their CLOVR metagenome analysis pipeline at over $5 million per terabase.

Chart showing shrinking cost for DNA sequencing. This comparison with Moore’s law roughly describing the development of computing costs highlights the growing gap between sequence data and the available analysis resources. Source: NHGRI (Institute 2012)

Nevertheless, the growth in data enabled by next-generation sequencing platforms also provides an exciting opportunity for studying microbial communities: 99% of the microbes in which have not yet been cultured (Riesenfeld, Schloss, and Handelsman 2004). Cultivation-free methods (often summarized as metagenomics) offer novel insights into the biology of the vast majority of life on Earth (Thomas, Gilbert, and Meyer 2012).

Several types of studies use DNA for environmental analyses:

- Environmental clone libraries (functional metagenomics): use of Sanger sequencing (frequently) instead of more cost-efficient next-generation sequencing

- Amplicon metagenomics (single gene studies, 16s rDNA): next-generation sequencing of PCR amplified ribosomal genes providing a single reference gene–based view of microbial community ecology

- Shotgun metagenomics: use of next-generation technology applied directly to environmental samples

- Metatranscriptomics: use of cDNA transcribed from mRNA

Each of these methods has strengths and weaknesses (see (Thomas, Gilbert, and Meyer 2012)), as do the various sequencing technologies (see (Loman et al. 2012)).

- Who is out there? Identifying the composition of a microbial community either by using amplicon data for single genes or by deriving community composition from shotgun metagenomic data using sequence similarities.

- What are they doing? Using shotgun data (or metatranscriptomic data) to derive the functional complement of a microbial community using similarity searches against a number of databases.

- Who is doing what? Based on sequence similarity searches, identifying the organisms encoding specific functions.

The system supports the analysis of the prokaryotic content of samples, analysis of viruses and eukaryotic sequences is not currently supported, due to software limitations.

MG-RAST users can upload raw sequence data in fastq, fasta and sff format; the sequences will be normalized (quality controlled) and processed and summaries automatically generated. The server provides several methods to access the different data types, including phylogenetic and metabolic reconstructions, and the ability to compare the metabolism and annotations of one or more metagenomes, individually or in groups. Access to the data is password protected unless the owner has made it public, and all data generated by the automated pipeline is available for download in variety of common formats.

Brief description¶

The MG-RAST pipeline performs quality control, protein prediction, clustering and similarity-based annotation on nucleic acid sequence datasets using a number of bioinformatics tools (see Section 13.2.1. MG-RAST was built to analyze large shotgun metagenomic data sets ranging in size from megabases to terabases. We also support amplicon (16S, 18S, and ITS) sequence datasets and metatranscriptome (RNA-seq) sequence datasets. The current MG-RAST pipeline is not capable of predicting coding regions from eukaryotes and thus will be of limited use for eukaryotic shotgun metagenomes and/or the eukaryotic subsets of shotgun metagenomes.

Data on MG-RAST is private to the submitting user unless shared with other users or made public by the user. We strongly encourage the eventual release of data and require metadata (“data describing data”) for data sharing or publication. Data submitted with metadata will be given priority for the computational queue.

You need to provide (raw or assembled) nucleotide sequence data and sample descriptions (“metadata”). The system accepts sequence data in FASTA, FASTQ and SFF format and metadata in the form or GSC ( http://gensc.org/ ) standard compliant checklists (see Yilmaz et al, Nature Biotech, 2011). Uploads can be put in the system via either the web interface or a command line tool. Data and metadata are validated after upload.

You must choose quality control filtering options at the time you submit your job. MG-RAST provides several options for quality control (QC) filtering for nucleotide sequence data, including removal of artificial duplicate reads, quality-based read trimming, length-based read trimming, and screening for DNA of model organisms (or humans). These filters are applied before the data are submitted for annotation.

The MG-RAST pipeline assigns an accession number and puts the data in a queue for computation. The similarity search step is computationally expensive. Small jobs can complete as fast as hours, while large jobs can spend a week waiting in line for computational resources.

MG-RAST performs a protein similarity search between predicted proteins and database proteins (for shotgun) and a nucleic-acid similarity search (for reads similar to 16S and 18S sequences).

MG-RAST presents the annotations via the tools on the analysis page which prepare, compare, display, and export the results on the website. The download page offers the input data, data at intermediate stages of filtering, the similarity search output, and summary tables of functions and organisms detected.

MG-RAST can compare thousands of data sets run through a consistent annotation pipeline. We also provide a means to view annotations in multiple different namespaces (e.g. SEED functions, K.O. Terms, Cog Classes, EGGnoggs) via the M5Nr.

The publication “Metagenomics-a guide from sampling to data analysis” (PMID 22587947) in Microbial Informatics and Experimentation, 2012 is a good review of best practices for experiment design for further reading.

License¶

Citing MG-RAST¶

http://www.biomedcentral.com/1471-2105/ 9/386

.

http://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1004008

.

Version history¶

Version 1¶

The original version of MG-RAST was developed in 2007 by Folker Meyer, Andreas Wilke, Daniel Paarman, Bob Olson, and Rob Edwards. It relied heavily on the SEED(Overbeek et al. 2005) environment and allowed upload of preprocessed 454 and Sanger data.

Version 2¶

Version 2, released in 2008, had numerous improvements. It was optimized to handle full-sized 454 datasets and was the first version of MG-RAST that was not fully SEED based. Version 2.0 used BLASTX analysis for both gene prediction and functional classification(Meyer et al. 2008).

Version 3¶

While version 2 of MG-RAST was widely used, it was limited to datasets smaller than a few hundred megabases, and comparison of samples was limited to pairwise comparisons. Version 3 is not based on SEED technology; instead, it uses the SEED subsystems as a preferred data source. Starting with version 3, MG-RAST moved to github.

Version 3.6¶

With version 3.6 MG-RAST was containerized, moving from a bare metal infrastructure to a set of docker containers running in a Fleet/SystemD/etcD environment.

Version 4¶

Version 4.0 brings a new web interface, fully relying on the API for data access and moves the bulk of the data stored from Postgres to Cassandra. The new web interface moves the data visualization burden from the web server to the clients machine, using Javascript and HTML5 heavily.

In version 4.0 we have moved the changed the backend store for profiles. While previous version stored a pre-computed mapping of observed abundances to functional or taxonomic categories, this is now computed on the fly. The number of profiles stored is reduced to the MD5 and LCA profiles. The API has been augmented to allow dynamic mapping to categories, to provide the required bandwidth we have migrated the profile store from Postgres to Cassandra.

The web interface of the previous version predated the API, the user interface for version 4.0 now uses the API. The web interface has been re-written in JavaScript/HTML5. Unlike previous version the web interface now is executed on the client (inside the browser) and now soupports any recent browser.

With version 4.04 we are switching the main web site to be mg-rast.org and are also turning on https by default. For a limited time, the unencrypted access protocols will remain available. We encourage all users to upgrade their bookmarks and also install upgraded versions of the CRAN package and/or the python tool suite. We also switched the similarity tool to Diamond(Buchfink, Xie, and Huson 2015).

Comparison of versions 2 and 3¶

Version 3 added the ability to analyze massive amounts of Illumina reads by introducing a significant number of changes to the pipeline and the underlying platform technology. In version 3 we introduced the notion of the API as the central component of the system.

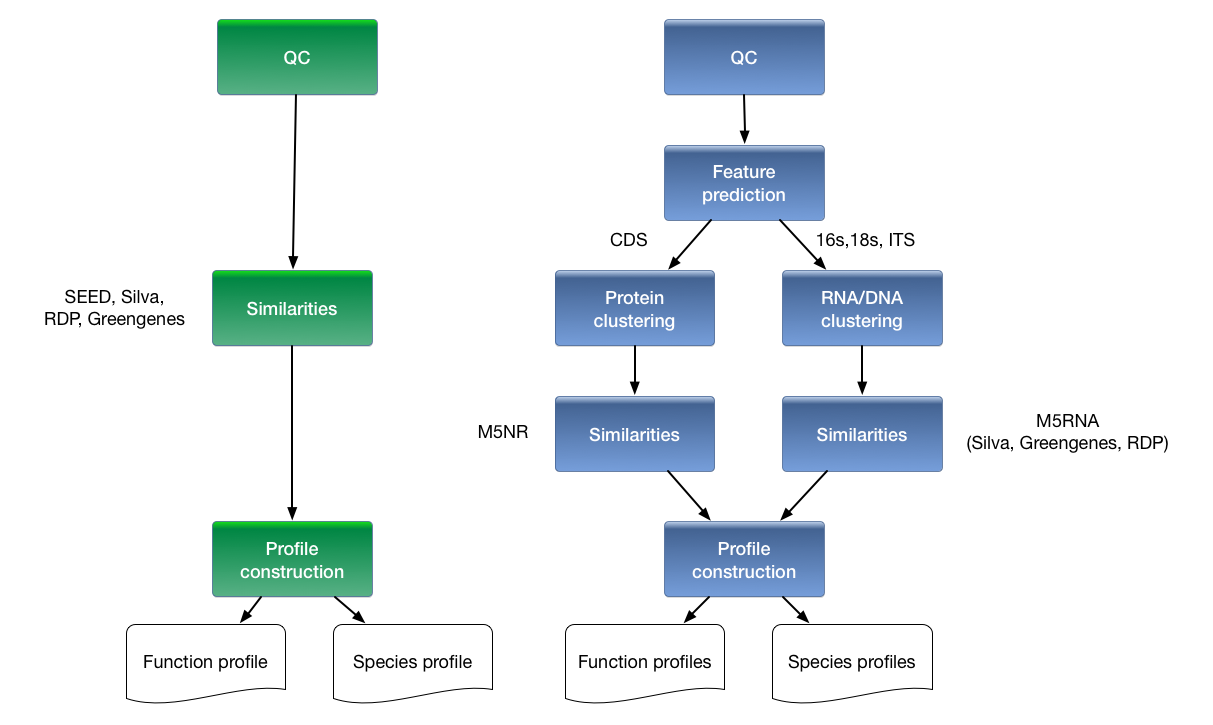

In the 3.0 version, datasets of tens of gigabases can be annotated, and comparison of taxa or functions that differ between samples is now limited only by the available screen real estate. Figure 1.1 shows a comparison of the analytical and computational approaches used in MG-RAST v2 and v3. The major changes are the inclusion of a dedicated gene-calling stage using FragGenescan (Rho, Tang, and Ye 2010), clustering of predicted proteins at 90% identified by using uclust (Edgar 2010), and the use of BLAT (Kent 2002) for the computation of similarities. Together with changes in the underlying infrastructure, this version has allowed dramatic scaling of the analysis with the limited hardware available.

Similar to version 2.0, the new version of MG-RAST does not pretend to know the correct parameters for the transfer of annotations. Instead, users are empowered to choose the best parameters for their datasets.

Comparison of versions 3 and 4¶

The roadmap for version 4 has a number of key elements that will be implemented step-by-step, currently the following features are implemented:

- New JavaScript web interface using the API

- Cassandra instead of Postgres as main data store for profiles

Overview of processing pipeline in (left) MG-RAST v2 and (right) MG-RAST v3. In the old pipeline, metadata was rudimentary, compute steps were performed on individual reads on a 40-node cluster that was tightly coupled to the system, and similarities were computed by BLAST to yield abundance profiles that could then be compared on a per sample or per pair basis. In the new pipeline, rich metadata can be uploaded, normalization and feature prediction are performed, faster methods such as BLAT are used to compute similarities, and the resulting abundance profiles are fed into downstream pipelines on the cloud to perform community and metabolic reconstruction and to allow queries according to rich sample and functional metadata.

The new version of MG-RAST represents a rethinking of core processes and data products, as well as new user interface metaphors and a redesigned computational infrastructure. MG-RAST supports a variety of user-driven analyses, including comparisons of many samples, previously too computationally intensive to support for an open user community.

Scaling to the new workload required changes in two areas: the underlying infrastructure needed to be rethought, and the analysis pipeline needed to be adapted to address the properties of the newest sequencing technologies.

The MG-RAST team¶

MG-RAST was started by Rob Edwards and Folker Meyer in 2007. The MG-RAST team has significantly expanded in the past few years. The team is listed below.

- Andreas Wilke

- Wolfgang Gerlach

- Travis Harrison

- William L. Trimble

- Folker Meyer

MG-RAST alumni¶

The following people were associated with MG-RAST in the past:

- Daniel Paarmann, 2007-2008

- Rob Edwards, 2007-2008

- Mike Kubal, 2007-2008

- Alex Rodriguez, 2007-2008

- Bob Olson, 2007-2009

- Daniela Bartels, 2007-2011

- Yekaterina Dribinsky, 2011

- Jared Wilkening, 2007-2013

- Mark D’Souza, 2007-2014

- Hunter Matthews 2009-2014

- Narayan Desai, 2011-2014

- Wei Tang, 2012-2015

- Daniel Braithwaite, 2012-2015

- Elizabeth M. Glass, 2008-2016

- Jared Bischof, 2010-2016

- Kevin Keegan, 2009-2016

- Tobias Paczian 2007 - 2018

Under the hood: The MG-RAST technology platform¶

The backend¶

While originally MG-RAST data was stored in a shared filesystem and a MySQL database, the backend store evolved with growing popularity and demand.

Currently a number of data stores are used to provide the underpinning for various parts of the MG-RAST API.

An approximate mapping of stores to functions in version 4.0 is provided in table [xtab:v4-stores-to-API].

| Function | data store | comment |

|---|---|---|

| Search | Apache, SOLR and elastic search | |

| Profiles | Cassandra and SHOCK | |

| M5NR | Cassandra | |

| Authentication | MySQL | |

| Project | MySQL | |

| Access control | MySQL | |

| Metadata | MySQL | |

| Files | SHOCK |

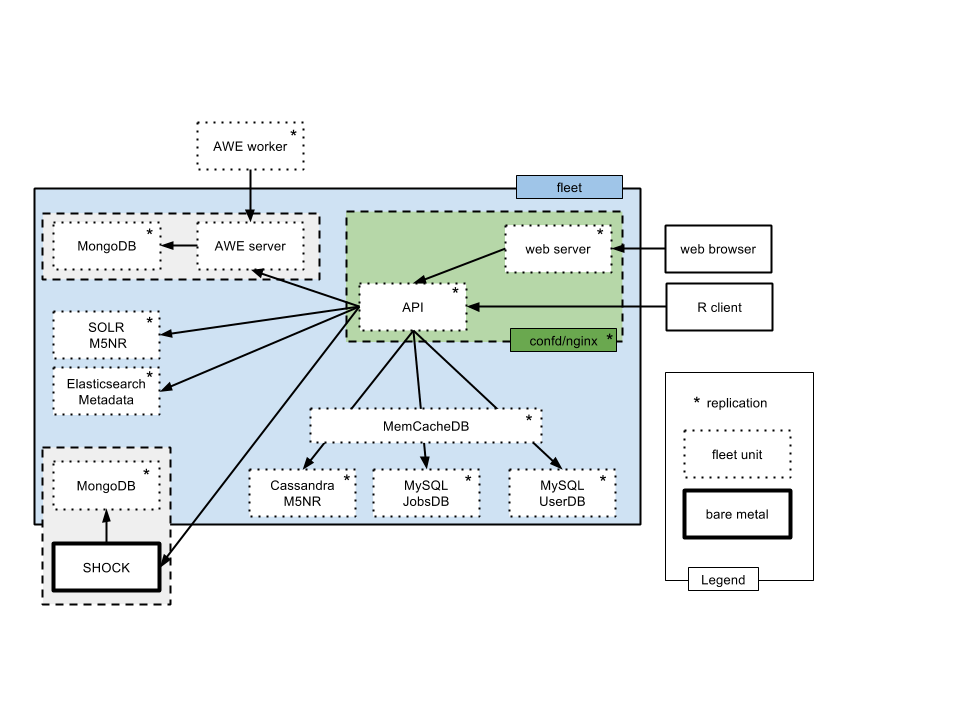

The backend infrastructure and the overall system layout is shown in figure 2.1.

Overview of the production system in mid 2016. Fleet is used to manage a number of containerized services (shown with dashed lines). Two services are provisioned outside the Fleet system: SHOCK (providing 0.7 Petabyte of storage) and a Postgres clusters. We note the significant number of different databases used to serve data required for the API.

As of version 3.6 the majority of the services are provisioned as containers, provisioned as a set of Fleet units described in https://github.com/MG-RAST/MG-RAST-infrastructure/tree/master/fleet-units.

The supporting technologies: Skyport, AWE and SHOCK¶

One key aspect of scaling MG-RAST to large numbers of modern NGS datasets is the use of cloud computing [2], which decouples MG-RAST from its previous dedicated hardware resources.

We use AWE (Wilke et al. 2011) an efficient, open source resource manager to execute the MG-RAST workflow. We expanded AWE to work with Linux containers forming the Skyport system (Gerlach et al. 2014). AWE and Skyport use RESTful interfaces thus allowing the addition of clients without the need to add firewall exceptions and/or massive system reconfiguration.

The main MG-RAST data store is the the SHOCK data management system (Wilke et al. 2015) developed alongside AWE. SHOCK like AWE relies on a RESTful interface instead of a more traditional shared file system.

When we introduced the technologies described above to replace a shared file system (Sun NFS mounted on several hundred nodes), we saw a speed up of a factor of 750x on identical hardware.

Data model¶

The MG-RAST data model (see Figure 2.2) has changed dramatically in order to handle the size of modern next-generation sequencing datasets. In particular, we have made a number of choices that reduce the computational and storage burden.

We note that the size of the derived data products for a next-generation dataset in MG-RAST is typically about 10x the size of the actual dataset. Individual datasets now may be as large as a terabase [3], with the on-disk footprint significantly larger than the basepair count because of the inefficient nature of FASTQ files, which basically double the on-disk size for FASTQ representations.

- Abundance profiles. Using abundance profiles, where we count the number of occurrences of function or taxon per metagenomic dataset, is one important factor that keeps the datasets manageable. Instead of growing the dataset sizes (often with several hundred million individual sequences per dataset), the data products now are more or less static in size.

- Single similarity computing step per feature type. By running exactly one similarity computation for proteins and another one for rRNA features, we have limited the computational requirements.

- Clustering of features. By clustering features at 90% identity, we reduce the number of times we compute on similar proteins. Abundant features will be even more efficiently clustered, leading to more compression for abundant species.

As shown in Figure 2.2, MG-RAST relies on abundance profiles to capture information for each metagenome. The following abundance profiles are calculated for every metagenome.

- MD5s – number of sequences (clusters) per database entry in the M5nr.

- functions – summary of all the MD5s that match a given function.

- ontologies – summary of all the MD5s that match a given hierarchy entry.

- organisms – summary of all MD5s that match a given taxon entry.

- lowest common ancestors

The static helper tables (show in blue in Figure [fig:mgrast_analysis-schema]) help keep the main tables smaller, by normalizing and providing integer representations for the entities in the abundance profiles.

THIS NEEDS TO BE REDONE!!!!!!

[fig:mgrast_analysis-schema]

The MG-RAST pipeline¶

MG-RAST provides automated processing of environmental DNA sequences via a pipeline. The pipeline has multiple steps that can be grouped into five stages:

We restrict the pipeline annotations to protein coding genes and ribosomal RNA (rRNA) genes.

- Data hygiene:Quality control and removal of artifacts.

- Feature extraction:Identification of protein coding and rRNA features (aka “genes”)

- Feature annotation:Identification of putative functions and taxonomic origins for each of the features

- Profile generation:Creation of multiple on disk representations of the information obtained above.

- Data loading:Loading the representations into the appropriate databases.

The pipeline shown in Figure 3.1 contains a significant number of improvements over previous versions and is optimized for accuracy and computational cost.

Using the M5nr (Wilke et al. 2012) (an MD5 nonredundant database), the new pipeline computes results against many reference databases instead of only SEED. Several key algorithmic improvements were needed to support the flood of user-generated data (see Figure [fig:mgrast-job-sizes]). Using dedicated software to perform gene prediction instead of using a similarity-based approach reduces runtime requirements. The additional clustering of proteins at 90% identity reduces data while preserving biological signals.

Below we describe each step of the pipeline in some detail. All datasets generated by the individual stages of the processing pipeline are made available as downloads. Appendix 11 lists the available files for each dataset.

Data hygiene¶

Preprocessing¶

After upload, data is preprocessed by using SolexaQA (Cox, Peterson, and Biggs 2010) to trim low-quality regions from FASTQ data. Platform-specific approaches are used for 454 data submitted in FASTA format: reads more than than two standard deviations away from the mean read length are discarded following (Huse et al. 2007). All sequences submitted to the system are available, but discarded reads will not be analyzed further.

Dereplication¶

For shotgun metagenome and shotgun metatranscriptome datasets we perform a dereplication step. We use a simple k-mer approach to rapidly identify all 20 character prefix identical sequences. This step is required in order to remove Artificial Duplicate Reads (ADRs) (Gomez-Alvarez, Teal, and Schmidt 2009). Instead of simply discarding the ADRs, we set them aside and use them later for error estimation.

We note that dereplication is not suitable for amplicon datasets that are likely to share common prefixes.

DRISEE¶

MG-RAST v3 uses DRISEE (Duplicate Read Inferred Sequencing Error Estimation) (Keegan et al. 2012) to analyze the sets of Artificial Duplicate Reads (ADRs) (Gomez-Alvarez, Teal, and Schmidt 2009) and determine the degree of variation among prefix-identical sequences derived from the same template. See Section 4.2 for details.

Screening¶

The pipeline provides the option of removing reads that are near-exact matches to the genomes of a handful of model organisms, including fly, mouse, cow, and human. The screening stage uses Bowtie (Langmead et al. 2009) (a fast, memory-efficient, short read aligner), and only reads that do not match the model organisms pass into the next stage of the annotation pipeline.

Note that this option will remove all reads similar to the human genome and render them inaccessible. This decision was made in order to avoid storing any human DNA on MG-RAST.

Feature identification¶

Protein coding gene calling¶

The previous version of MG-RAST used similarity-based gene predictions, an approach that is significantly more expensive computationally than de novo gene prediction. After an in-depth investigation of tool performance (Trimble et al. 2012), we have moved to a machine learning approach: FragGeneScan (Rho, Tang, and Ye 2010). Using this approach, we can now predict coding regions in DNA sequences of 75 bp and longer. Our novel approach also enables the analysis of user-provided assembled contigs.

We note that FragGeneScan is trained for prokaryotes only. While it will identify proteins for eukaryotic sequences, the results should be viewed as more or less random.

rRNA detection¶

An initial search using vsearch (???) against a reduced RNA database efficiently identifies ribosomal RNA. The reduced database is a 90% identity clustered version of the SILVA, Greengenes and RDP databases and is used to rapidly identify sequences with similarities to ribosomal RNA.

Feature annotation¶

Protein filtering¶

We indentify possibly protein coding regions overlapping ribosomal RNAs and exclude them from further processing.

AA clustering¶

MG-RAST builds clusters of proteins at the 90% identity level using the cd-hit (???) preserving the relative abundances. These clusters greatly reduce the computational burden of comparing all pairs of short reads, while clustering at 90% identity preserves sufficient biological signals.

Protein identification¶

Once created, a representative (the longest sequence) for each cluster is subjected to similarity analysis.

For rRNA similarities, instead of BLAST we use sBLAT, an implementation of the BLAT algorithm (Kent 2002), which we parallelized using OpenMP (Board 2011) for this work.

As of version 4.04 we have migrated to DIAMOND(Buchfink, Xie, and Huson 2015) to compute protein similarities against M5nr (Wilke et al. 2012). During computation protein and rRNA sequences are represented only via a sequenced derived identifier (an MD5 checksum). Once the computation completes, we generate a number of representations of the observed similarities for various purposes.

Once the similarities are computed, we present reconstructions of the species content of the sample based on the similarity results. We reconstruct the putative species composition of the sample by looking at the phylogenetic origin of the database sequences hit by the similarity searches.

Sequence similarity searches are computed against a protein database derived from the M5nr (Wilke et al. 2012), which provides nonredundant integration of many databases: GenBank,(Benson et al. 2013), SEED (Overbeek et al. 2005), IMG (Markowitz et al. 2008), UniProt (Magrane and Consortium 2011), KEGG (Kanehisa 2002), and eggNOGs (Jensen et al. 2008).

rRNA clustering¶

The rRNA-similar reads are then clustered at 97% identity using cd-hit, and the longest sequence is picked as the cluster representative.

rRNA identification¶

A BLAT similarity search for the longest cluster representative is performed against the M5rna database which integrates SILVA(Pruesse et al. 2007), Greengenes(DeSantis et al. 2006), and RDP(Cole et al. 2003).

Profile generation¶

In the final stage, the data computed so far is integrated into a number of data products. The most important one are the abundance profiles.

Abundance profiles represent a pivoted and aggregated version of the similarity files. We compute best hit, representative hit and LCA abundance profiles (see 4.5).

Database loading¶

In the final step the profiles are loaded into the respective databases.

MG-RAST data products¶

MG-RAST provices a number of data products in a variety of formats.

- Fasta and FastQSequence data can be downloaded via the API and web interface as Fasta (or FastQ) files

- JSONMetadata and Tables and other structured data can be downloaded via the APi or the web site in JSON format.

- SpreadsheetMetadata and Tables can be downloaded as spreadsheets via the web interface.

- SVG and PNGImages can be downloaded via the web site interface in SVG and PNG formast.

- BIOM v1BIOM (McDonald et al. 2012) files can be downloaded via the web interface for use with e.g., QIIME (Caporaso et al. 2010).

- Sequence dataThe originally submitted sequence data as well as the various subsets resulting from processing can be downloaded.

- Metadatadata describing data in GSC-compliant format.

Analysis results – results of running the MG-RAST pipeline. The list includes all intermediate data products and is intended to serve as a basis for further analysis outside the MG-RAST pipeline.

Details on the individual files are in Appendix 11.

Abundance profiles¶

Abundance profiles are the primary data product that MG-RAST’s user interface uses to display information on the datasets.

Using the abundance profiles, the MG-RAST system defers making a decision on when to transfer annotations. Since there is no well-defined threshold that is acceptable for all use cases, the abundance profiles contain all similarities and require their users to set cut-off values.

The threshold for annotation transfer can be set by using the following parameters: e-value, percent identity, and minimal alignment length.

The taxonomic profiles use the NCBI taxonomy. All taxonomic information is projected against this data. The functional profiles are available for data sources that provide hierarchical information. These currently comprise the following.

SEED Subsystems

The SEED subsystems(Overbeek et al. 2005) represent an independent reannotation effort that powers, for example, the RAST(Aziz et al. 2008) effort. Manual curation of subsystems makes them an extremely valuable data source.

Subsystems represent a four-level hierarchy:

- Subsystem level 1 – highest level

- Subsystem level 2 –

- Subsystem level 3 – similar to a KEGG pathway

- Subsystem level 4 – actual functional assignment to the feature in question

The page at http://pubseed.theseed.org/SubsysEditor.cgi allows browsing the subsystems.

KEGG Orthologs

We use the KEGG(Kanehisa 2002) enzyme number hierarchy to implement a four-level hierarchy.

- KEGG level 1 – first digit of the EC number (EC:X.*.*.*)

- KEGG level 2 – first two digits of the EC number (EC:X.Y.*.*)

- KEGG level 3 – first three digits of the EC number (EC:X:Y:Z:.*)

- KEGG level 4 – entire four digits EC number

We note that KEGG data is no longer available for free download. We thus have to rely on using the latest freely downloadable version of the data.

The high-level KEGG categories are as follows.

- Cellular Processes

- Environmental Information Processing

- Genetic Information Processing

- Human Diseases

- Metabolism

- Organizational Systems

COG and EGGNOG Categories

The high-level COG and EGGNOG categories are as follows.

- Cellular Processes

- Information Storage and Processing

- Metabolism

- Poorly Characterized

We note that for most metagenomes the coverage of each of the four namespaces is quite different. The “source hits distribution” (see Section [section:source-hits-distribution]) provides information on how many sequences per dataset were found for each database.

DRISEE profile¶

DRISEE (Keegan et al. 2012) is a method for measuring sequencing error in whole-genome shotgun metagenomic sequence data that is independent of sequencing technology and overcomes many of the shortcomings of Phred. It utilizes artificial duplicate reads (ADRs) to generate internal sequence standards from which an overall assessment of sequencing error in a sample is derived. The current implementation of DRISEE is not suitable for amplicon sequencing data or other samples that may contain natural duplicated sequences (e.g., eukaryotic DNA where gene duplication and other forms of highly repetitive sequences are common) in high abundance. DRISEE results are presented on the Overview page for each MG-RAST sample for which a DRISEE profile can be determined. Total DRISEE error presents the overall DRISEE-based assessment of the sample as a percent error:

where \({base\_errors}\) refers to the sum of DRISEE-detected errors and \({total\_bases}\) refers to the sum of all bases considered by DRISEE. Beneath the Total DRISEE Error, a barchart indicates the error for the sample (the red vertical bar) as well as the minimum (barchart initial value), maximum (barchart final value), mean \((\mu)\), mean +/- one standard deviation (\(\sigma\)), and mean +/- two standard deviations (\(2\sigma\)) Total DRISEE Errors observed among all samples in MG-RAST for which a DRISEE profile has been computed.

The DRISEE plot presents a more detailed view of the DRISEE profile; the DRISEE percent error is displayed per base. Individual errors (A,T,C,G, and N substitution rates as well as the InDel rate) are presented as well as a cumulative total.

Users can download DRISEE values as a tab-separated file. The first line of the file contains headers for the values in the second line. The second line contains DRISEE percent error values for A substitutions (A_err), T substitutions (T_err), C substitutions (C_err), G substitutions (G_err), N substitutions (N_err), insertions and deletions (InDel_err), and the Total DRISEE Error. The third line indicates headers for all remaining lines. Rows 4 and 4+ present the DRISEE counts for the indexed position across all considered bins of ADRs. Column values represent the number of reads that match an A,T,C,G,N, or InDel at the indicated position relative to the appropriate consensus sequence followed by the number of reads that do not match an A,T,C,G,N, or InDel.

Kmer profiles¶

kmer digests are an annotation-independent method for describing sequence datasets that can support inferences about genome size and coverage. Here the Overview page presents several visualizations, evaluated at k=15: the kmer spectrum, kmer rank abundance, and ranked kmer consumed. All three graphs represent the same spectrum, but in different ways. The kmer spectrum plots the number of distinct kmers against kmer coverage; the kmer coverage is equivalent to number of observations of each kmer. The kmer rank abundance plots the relationship between kmer coverage and the kmer rank—answering the question “What is the coverage of the nth most-abundant kmer?”. Ranked kmer consumed plots the largest fraction of the data explained by the nth most-abundant kmers only.

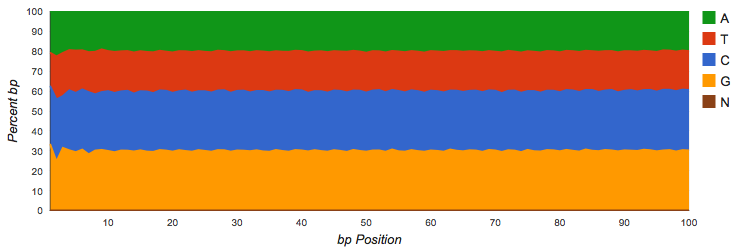

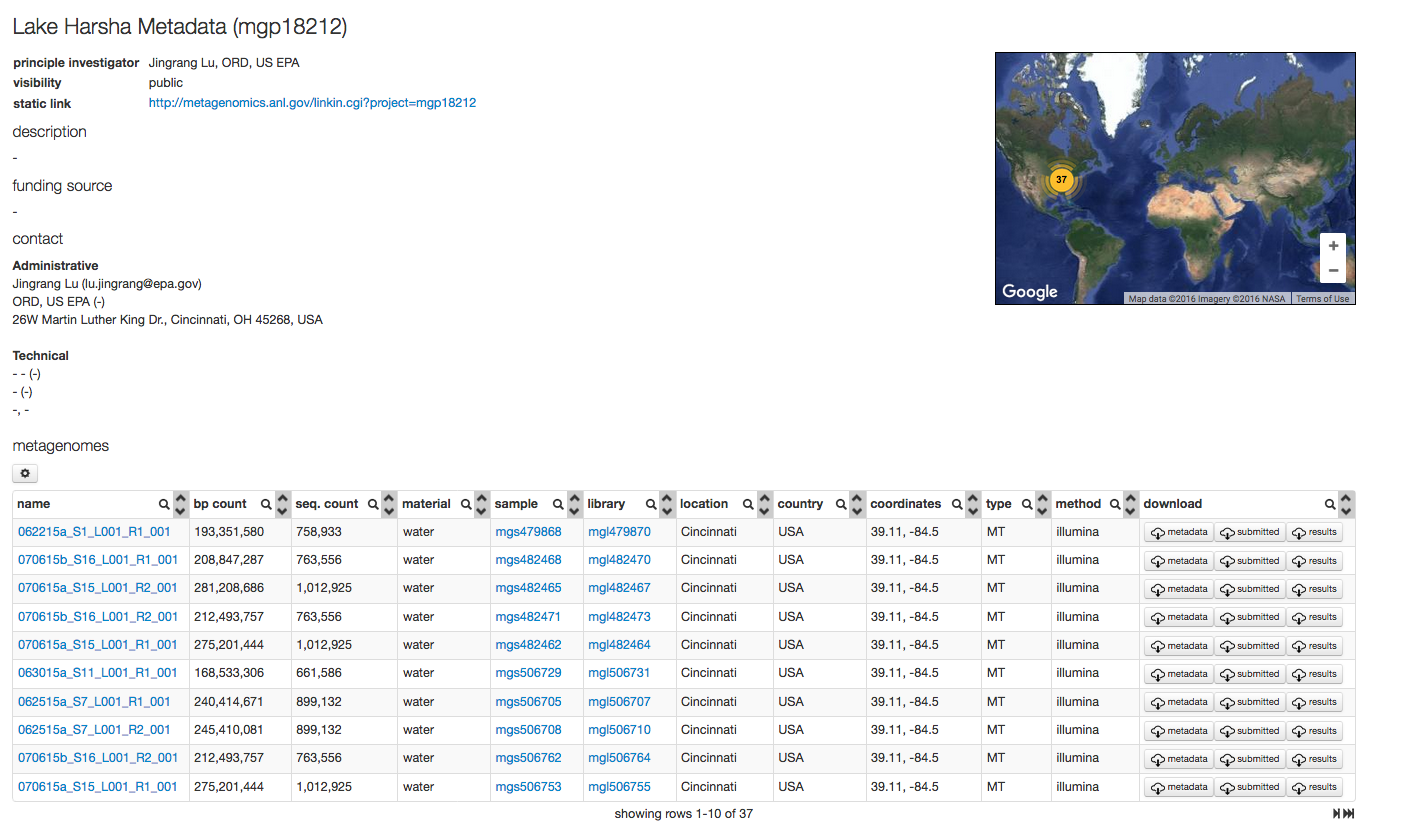

Nucleotide histograms¶

Nucleotide histograms are graphs showing the fraction of base pairs of each type (A, C, G, T, or ambiguous base “N”) at each position starting from the beginning of each read.

Amplicon datasets (see Figure 4.1) should show biased distributions of bases at each position, reflecting both conservation and variability in the recovered sequences:

Shotgun datasets should have roughly equal proportions of A, T, G and C basecalls, independent of position in the read as shown in Figure 4.2.

Vertical bars at the beginning of the read indicate untrimmed (see Figure 4.3), contiguous barcodes. Gene calling via FragGeneScan (Rho, Tang, and Ye 2010) and RNA similarity searches are not impacted by the presence of barcodes. However, if a significant fraction of the reads is consumed by barcodes, it reduces the biological information contained in the reads.

If a shotgun dataset has clear patterns in the data, these indicate likely contamination with artificial sequences. The dataset shown in see Figure 4.4 had a large fraction of adapter dimers.

Best hit, representative hit, and lowest common ancestor profiles¶

Mapping the similarities between the predicted protein coding and rRNA sequences to the databases results in files that map the predicted sequences against database entries (“SIM files”). In some cases sequences are identical between different database records, e.g. version of E. coli might share identical proteins and it becomes impossible to determine the “correct” organism name.

In those cases, the translation of those SIMS (that are against an anonymous database, with merely MD5 hashes used as identifiers; see M5NR) can be done in several different ways.

- best hit – using one organisms

- represenative hit – we pick a random member of the group of idential sequences, the strain you know to be in the sample might not be the representative, the counts are correct, no inflation. (this will ensure that your favorite strain is also listed, but leads to an inflation in the counts)

Figures 4.5 and 4.6 show the effects of using the best and representative hit strategies.

Selecting representative hit for mapping data from study mgp128 against Subsystems leads to inflated numbers.

MG-RAST searches the nonredundant M5nr and M5rna databases in which each sequence is unique. These two databases are built from multiple sequence database sources, and the individual sequences may occur multiple times in different strains and species (and sometimes genera) with 100% identity. In these circumstances, choosing the “right” taxonomic information is not a straightforward process.

To optimally serve a number of different use cases, we have implemented three methods–best hit, representative hit, and lowest common ancestor—for end users to determine the number of hits (occurrences of the input sequence in the database) reported for a given sequence in their dataset.

Best hit¶

The best hit classification reports the functional and taxonomic annotation of the best hit in the M5nr for each feature. In those cases where the similarity search yields multiple same-scoring hits for a feature, we do not choose any single “correct” label. For this reason we have decided to double count all annotations with identical match properties and leave determination of truth to our users. While this approach aims to inform about the functional and taxonomic potential of a microbial community by preserving all information, subsequent analysis can be biased because of a single feature having multiple annotations, leading to inflated hit counts. For users looking for a specific species or function in their results, the best hit classification is likely what is wanted.

Representative hit¶

The representative hit classification selects a single, unambiguous annotation for each feature. The annotation is based on the first hit in the homology search and the first annotation for that hit in our database. This approach makes counts additive across functional and taxonomic levels and thus allows, for example, the comparison of functional and taxonomic profiles of different metagenomes.

Lowest Common Ancestor (LCA)¶

To avoid the problem of multiple taxonomic annotations for a single feature, we provide taxonomic annotations based on the widely used LCA method introduced by MEGAN (Huson et al. 2007). In this method all hits are collected that have a bit score close to the bit score of the best hit. The taxonomic annotation of the feature is then determined by computing the LCA of all species in this set. This replaces all taxonomic annotations from ambiguous hits with a single higher-level annotation in the NCBI taxonomy tree.

Comparison of methods¶

Users should be aware that the number of hits might be inflated if the best hit filter is used or that a favorite species might be missing despite a similar sequence similarity result if the representative hit filter is used (in fact, even if a 100% identical match to a favorite species exists).

One way to consider both the best hit and representative hit is that they overinterpret the available evidence. With the LCA classifier function, on the other hand, any input sequence is classified only down to a trustworthy taxonomic level. While naively this seems to be the best function to choose in all cases because it classifies sequences to varying depths, the approach causes problems for downstream analysis tools that might rely on everything being classified to the same level.

Numbers of annotations vs. number of reads¶

The MG-RAST v3 annotation pipeline does not usually provide a single annotation for each submitted fragment of DNA. Steps in the pipeline map one read to multiple annotations and one annotation to multiple reads. These steps are a consequence of genome structure, pipeline engineering, and the character of the sequence databases that MG-RAST uses for annotation.

The first step that is not one-to-one is gene prediction. Long reads (\(>\) \(400\)bp) and contigs can contain pieces of two or more microbial genes; when the gene caller makes this prediction, the multiple predicted protein sequences (called fragments) are annotated separately.

An intermediate clustering step identifies sequences at 90% amino acid identity and performs one search for each cluster. Sequences that do not fall into clusters are searched separately. The “abundance” column in the MG-RAST tables presents the estimate of the number of sequences that contain a given annotation, found by multiplying each selected database match (hit) by the number of representatives in each cluster. The final step that is not one-to-one is the annotation process itself. Sequences can exist in the underlying data sources many times with different labels. When those sequence are the best hit similarity, we do not have a principled way to choosing the “correct” label. For this reason we have decided to double count these annotations and leave determination of truth to our users. Note: Even when considering a single data source, double-counting can occur depending on the consistency of annotations. Also note: Hits refer to the number of unique database sequences that were found in the similarity search, not the number of reads. The hit count can be smaller than the number of reads because of clustering or larger due to double counting.

Metadata¶

MG-RAST is both an analytical platform and a data integration system. To enable data reuse, for example for meta-analyses, we require that all data being made publicly available to third parties contain at least minimal metadata. The MG-RAST team has decided to follow the minimal checklist approach used by the Genomics Standards Consortium (GSC)(Field et al. 2011).

While the GSC provides a GCDML (R. et al. 2008) encoding, this XML-based format is more useful to programmers than to end users submitting data. We have therefore elected to use spreadsheets to transport metadata. Specifically we use MIxS (Minimum information about any (x) sequence (MIxS) and MIMARKS (Minimum Information about a MARKer gene Survey) to encode minimal metadata (Yilmaz et al. 2010).

The metadata describe the origins of samples and provide details on the generation of the sequence data. While the GSC checklist aims at capturing a minimum of information, MG-RAST can handle additional metadata if supplied by the user. The metadata is stored in a simple key value format and is displayed on the Metagenome Overview page.

Once uploaded, the metadata spreadsheets are validated automatically, and users are informed of any problems.

The presence of metadata enables discovery by end users using contextual metadata. Users can perform searches such as “retrieve soil samples from the continental U.S.A.” If the users have added additional metadata (domain specific extension), additional queries are enabled: for example, “restrict the results to soils with a specific pH”.

The version 4.0 web interface¶

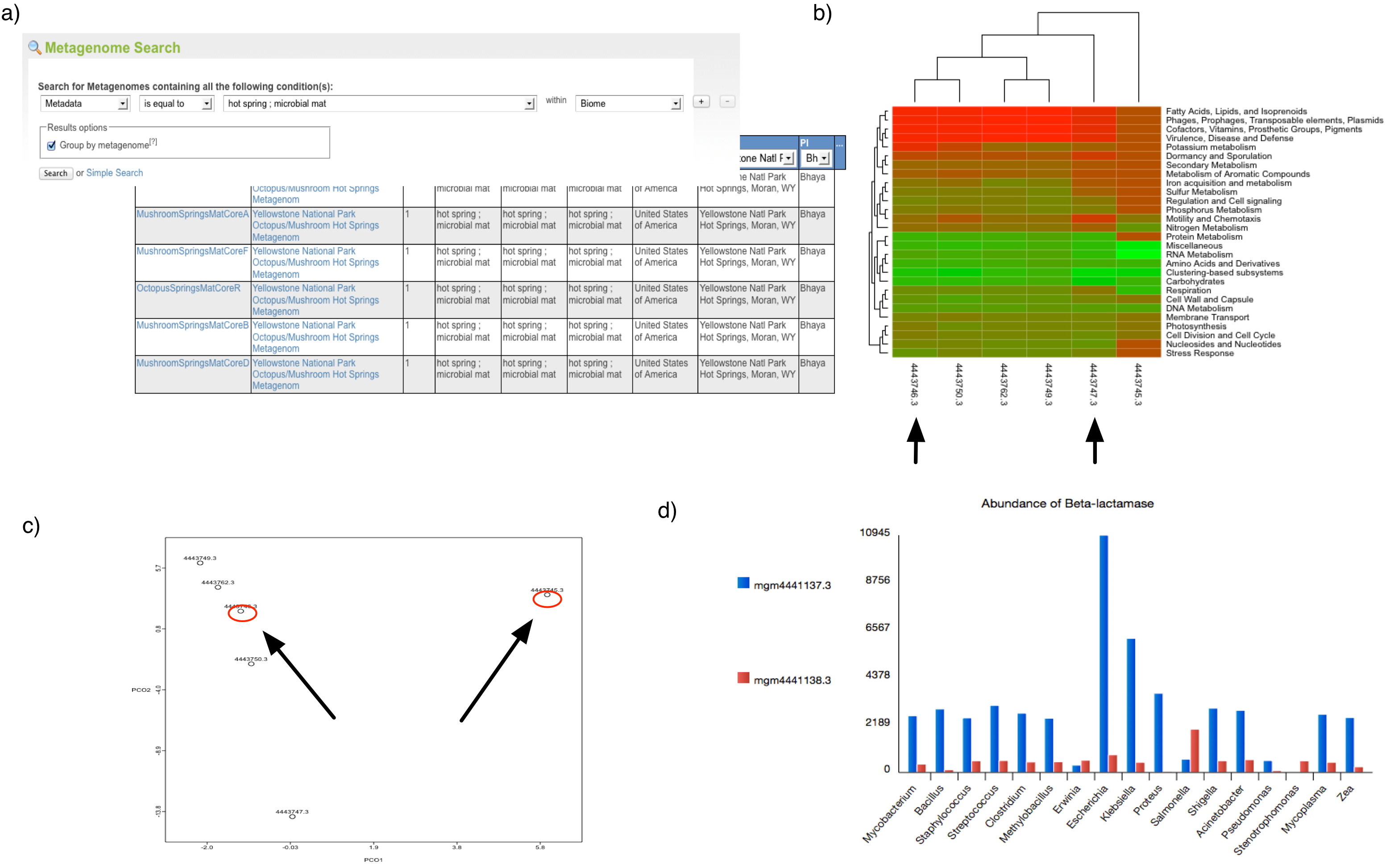

(a) Using the web interface for a search of metagenomes for microbial mats in hotsprings (GSC-MIMS-Keywords Biome=“hotspring; microbial mat”), we find 6 metagenomes (refs: 4443745.3, 4443746.3, 4443747.3, 4443749.3, 4443750.3, 4443762.3). (b) Initial comparison reveals some differences in protein functional class abundance (using SEED subsystens level 1). (c) From the PCoA plot using normalized counts of functional SEED Subsystem–based functional annotations (level 2) and Bray-Curtis as metric, we attempt to find differences between two similar datasets (MG-RAST-IDs: 444749.3, 4443762.3). (d) Using exported tables with functional annotations and taxonomic mapping, we analyze the distribution of organisms observed to contain beta-lactamase and plot the abundance per species for two distinct samples.

Figure 5.1 shows a sample analysis with MG-RAST.

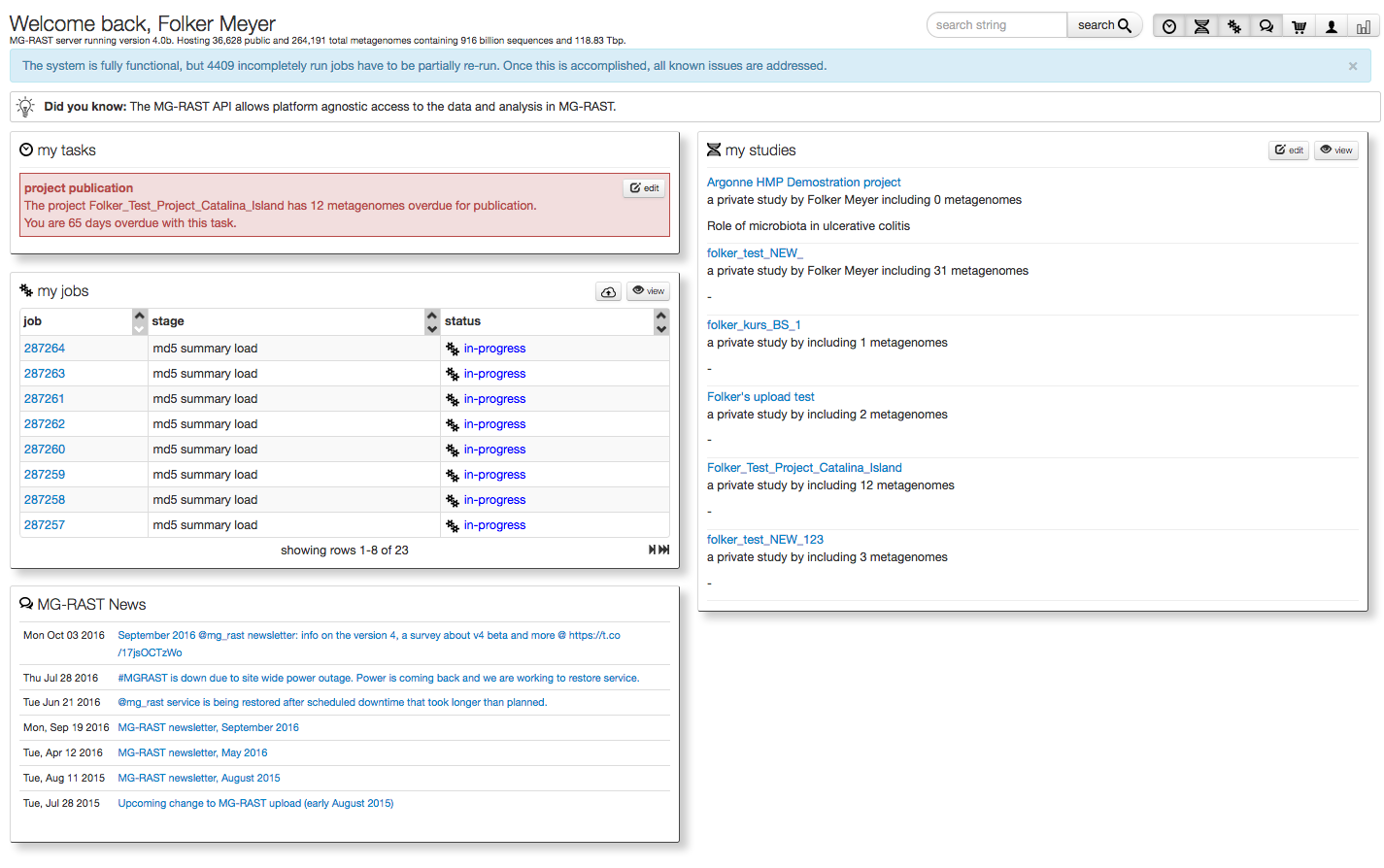

The “My Data” page¶

After login the user is directed to their personal “My Data” page (see figure 5.2), their personal MG-RAST homepage.

This page is provides information on data sets currently being processed, data sets owned by the user as well as any upcoming tasks for the users (i.e. release data to the public after the expiration of the quarantine period).

The page shows currently running jobs, the tasks the user needs to perform in the system, a list of their studies and more.

In addition to the data items mentioned above, the page also contains a list of the collections (see [Collections]) owned by the user.

Browsing, searching and viewing studies¶

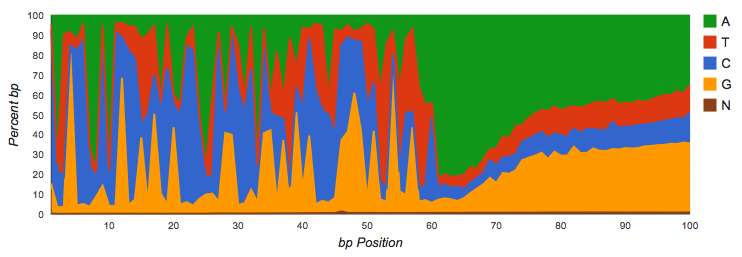

The search page¶

The search page lists all available metagenomic data sets and allows filtering. The looking glass symbol provides access to the search page, there are also shortcuts to the search function on multiple pages.

The basic function of the Search page is to find data sets that (1) contain a search string in the metadata (dataset name, project name, project description, GSC metadata), (2) contain specific functions (e.g., SEED functional roles, SEED subsystems, or GenBank annotations), or (3) contain specific organisms. The default search uses all three kinds of data.

In addition to a Google-like search that searches all data fields, we provide specialized searches in one of the three data types.

We note that due to data visibility (see [section:data-visibility]) not all data sets are visible to all users.

The search page has two components, the output widget (see figure 5.3) and the refinement widget.

The refinement widget allows filtering, the creation of saved searches and the creation of collections.

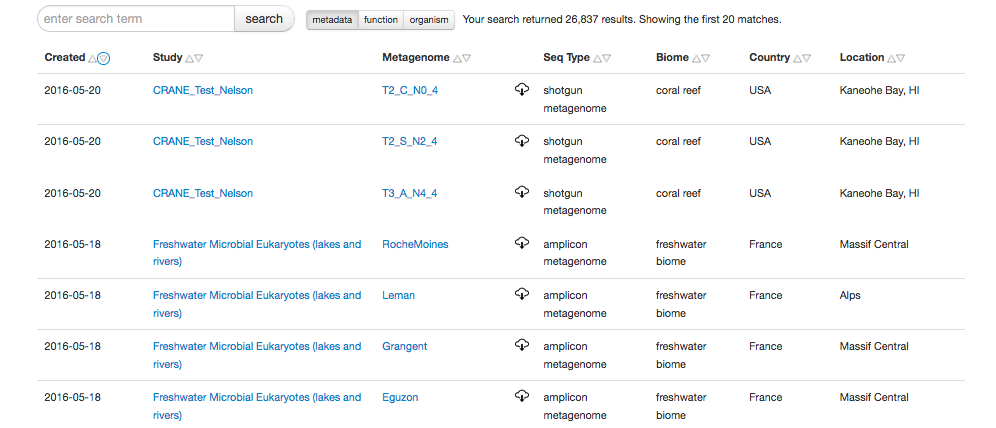

The study page¶

Data in MG-RAST is organized in studies (formerly known as Projects), each study has an automatically generated page.

The study page displays a project title, project description and other study specific information such as funding information. Users are encouraged to provide information on the project in addition to the metadata. The study page also includes the ability to display analysis results generated with the MG-RAST user interface.

The study page provides a number of tools to the data set owner:

- SharingStudies in MG-RAST while initially private (see [section:data-visibility]) can be shared with others. Simply provide any email address for an individual and they will be send a token that allows data access. Sharing is intended to allow pre-publication data sharing.

- Reviewer accessReviewer access tokens can be embedded in Manuscripts (or their cover letters) to allow reviewers and editors access to the data sets.

- Data PublicationData can be made public. This option will generate the only kind of identifiers that should be used in publications.

- Metadata editorComplete or correct the metadata.

Information about specific data sets (Overview page)¶

MG-RAST automatically creates an individual summary page for each dataset. This metagenome overview page provides a summary of the annotations for a single dataset. The page is made available by the automated pipeline once the computation is finished. The page is generated using default values for annotation transfer parameters (e.g. e-values) and thus likely does not represent good biological information, for that please use the Analysis page (see below).

However the Overview page is a good starting point for looking at a particular dataset. It provides a significant amount of information on technical details and biological content.

- Amplicon metagenome overview page

- Shotgun metagenome overview page

- Assembled shotgun metagenome overview page

- Metatranscriptome overview page

While the different types of overview pages are mostly identical, some visualizations are not relevant or even possible for certain data types. The decision which type of page to display is made based on the data, not the metadata provided by the user.

Previous version of MG-RAST provided almost complete download access to the underlying data, with version 4.0 we have expanded that to all tables and figures. The symbol shown in Figure 5.5.

The download symbol, providing access to the API call and the data in a variety of formats. The (i) displays the API call used to generate the respective data and the pull down menu provides access in variety of formats.

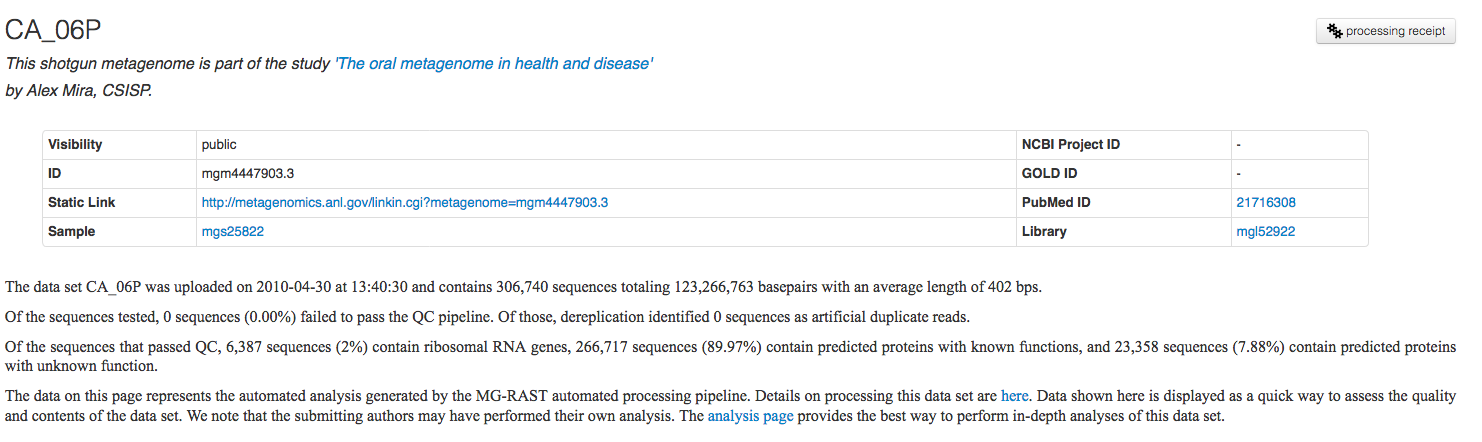

The Overview page provides the MG-RAST ID for a data set, a unique identifier that is usable as accession number for publications. Additional information such as the name of the submitting PI and organization and a user-provided metagenome name are displayed at the top of the page as well. A static URL for linking to the system that will be stable across changes to the MG-RAST web interface is provided as additional information (Figure 5.7).

Please note: Until the data is released to the public, temporary

identifiers are made available that will be replaced by permanent valid

IDs at the time of data release. The temporary identifiers are long

numbers used to represent the data sets until they are public. Do not

use temporary identifiers in publications as they are designed to change

over time. An example for a temporary ID is

4fbfe5d4216d676d343733343339372e33. A valid MG-RAST identifier is

mgm4447101.3. Both the API and the web site work with temporary IDs

and MG-RAST IDs.

The results on the Overview page (e.g. link) represent a quick summary of the biological and technical content of each data set. In the past we use a relatively simple approach (best-hit) to compute the biological information. Our reasoning was based on the fact that the “real” meaningful data was presented via the Analysis Page.

With version 4.04 we are now presenting an updated Overview page, results on this page are based on the lowest common ancestor (LCA) algorithm (see Figure 5.6). The LCA (or most recent common ancestor) for a given DNA sequence is computed by evaluating the set of similarities observed when matching the sequence against a number of databases.

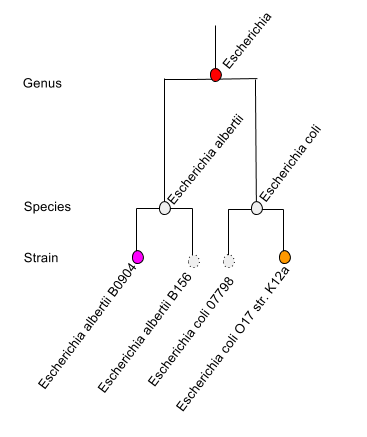

To put this in very simple language, when faced with uncertainty about which species to choose (e.g. when faced with a protein shared by many E. coli species), the MG-RAST Overview page will display a genus level result Escherichia (one level up from species). Likewise if no decision can be made between Escherichia and Shigella (both genera), the LCA will be set to Enterobacteriaceae.

Faced with a decision between multiple strain level hits (purple and orange) for different species, the LCA algorithm will pick higher (genus) level entity.

We note that this will change results for some data sets and cause the analysis pages to look differently, the underlying sequence analysis however is not affected, we merely set a new default value for the generation of overview graphs on this page.

Repeat: The scientific results (presented via the Analysis page) for download or comparison are not affected.

Additional reading: https://en.wikipedia.org/wiki/Most_recent_common_ancestor .

We point the readers attention to the download symbols next to each figure and or table, providing access to the data and API calls underlying each display item.

We provide an automatically generated paragraph of text describing the submitted data and the results computed by the pipeline. By means of the project information we display additional information provided by the data submitters at the time of submission or later.

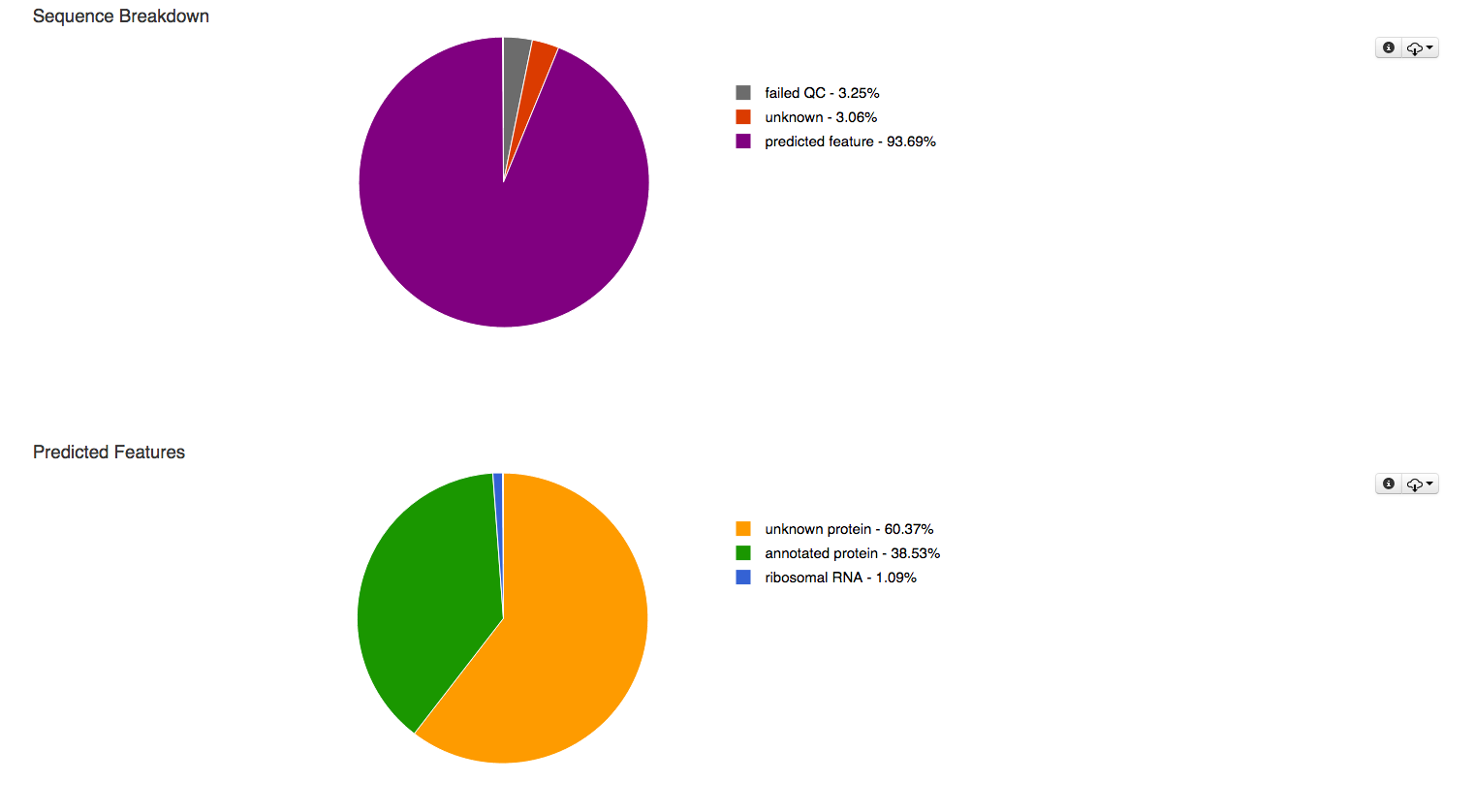

Sequence and feature breakdown¶

One of the first places to look at for each data set are the function and feature breakdown at the top of each overview page.

The pie charts at the top of the overview page (Figure 5.8) classify the submitted sequences submitted into several categories according to their QC results, sequences are classified as having failed QC (grey), containing at least one feature (purple) and unknown if they do not contain any recognized feature (red). In addition the predicted features are broken up into unknown protein (yellow), annotated protein (green) and ribosomal RNA (blue) in a second pie chart.

The first pie charts classifies the sequences submitted in this data set according to their QC results, the 2nd breaks down the detected features in to several categories.

What about other feature types?¶

We note that for performance reasons no other sequence features are annotated by the default pipeline. Other feature types such as small RNAs or regulatory motifs (e.g., CRISPRs (Bolotin et al. 2005)) not only will require significantly higher computational resources but also are frequently not supported by the unassembled short reads that constitute the vast majority of todays metagenomic data in MG-RAST. The quality of the sequence data coming from next-generation instruments requires careful design of experiments, lest the sensitivity of the methods is greater than the signal-to-noise ratio the data supports.

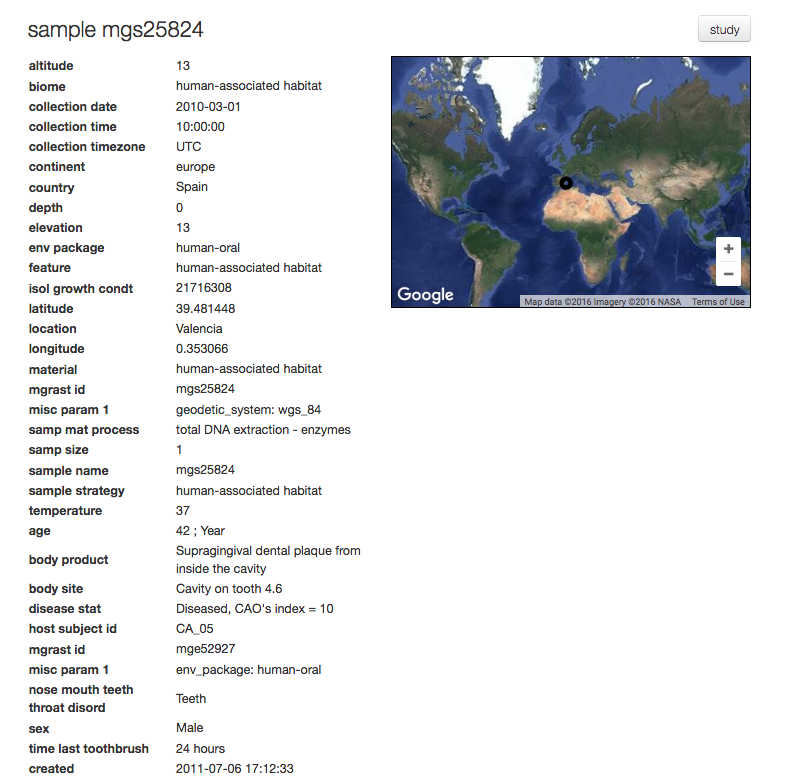

Metadata¶

The overview page also provides metadata for each dataset to the extent that such information has been made available. Metadata enables other researchers to discover datasets and compare annotations. MG-RAST requires standard metadata for data sharing and data publication. This is implemented using the standards developed by the Genomics Standards Consortium. Figure 5.9 shows the metadata summary for a dataset.

All metadata stored for a specific dataset is available in MG-RAST; we merely display a standardized subset in this table. A link at the bottom of the table (“More Metadata”) provides access to a table with the complete metadata. This enables users to provide extended metadata going beyond the GSC minimal standards. A mechanism to provide community consensus extensions to the minimal checklists and the environmental packages are explicitly encouraged but not required when using MG-RAST.

Functional and taxonomic breakdowns¶

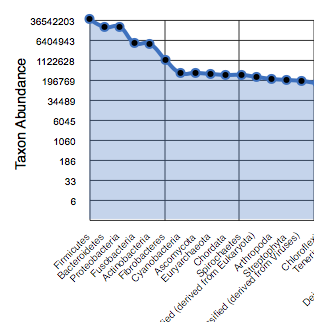

A number of pie charts are computed, represening a breakdown of the data into different taxonomic ranks (domain, phylum, class, order, family, genus) an the top levels of the four supported controlled annotation namespaces (Subsystems, Kegg Orthologues (KOGS), COGs and Eggnogs (NOGS)).

Rank abundance¶

The rank abundance plot (Figure 5.10) provides a rank-ordered list of taxonomic units at a user-defined taxonomic level, ordered by their abundance in the annotations.

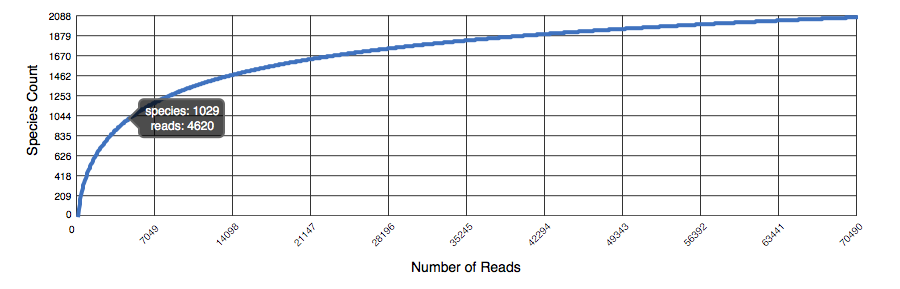

Rarefaction¶

The rarefaction curve of annotated species richness is a plot (see Figure 5.11 of the total number of distinct species annotations as a function of the number of sequences sampled. The slope of the right-hand part of the curve is related to the fraction of sampled species that are rare. On the left, a steep slope indicates that a large fraction of the species diversity remains to be discovered. If the curve becomes flatter to the right, a reasonable number of individuals is sampled: more intensive sampling is likely to yield only few additional species. Sampling curves generally rise quickly at first and then level off toward an asymptote as fewer new species are found per unit of individuals collected.

Rarefaction plot showing a curve of annotated species richness. This curve is a plot of the total number of distinct species annotations as a function of the number of sequences sampled.

The rarefaction curve is derived from the protein taxonomic annotations and is subject to problems stemming from technical artifacts. These artifacts can be similar to the ones affecting amplicon sequencing (Reeder and Knight 2009), but the process of inferring species from protein similarities may introduce additional uncertainty.

Alpha diversity¶

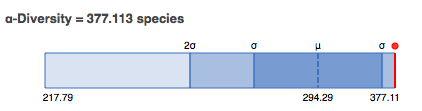

In this section we display an estimate of the alpha diversity based on the taxonomic annotations for the predicted proteins. The alpha diversity is presented in context of other metagenomes in the same project (see Figure 5.12).

Alpha diversity plot showing the range of \(\alpha\)-diversity values in the project the data set belongs to. The min, max, and mean values are shown, with the standard deviation ranges (\(\sigma\) and \(2\sigma\)) in different shades. The \(\alpha\)-diversity of this metagenome is shown in red.

The alpha diversity estimate is a single number that summarizes the distribution of species-level annotations in a dataset. The Shannon diversity index is an abundance-weighted average of the logarithm of the relative abundances of annotated species.

We compute the species richness as the antilog of the Shannon diversity:

where \(p_i\) are the proportions of annotations in each of the species categories. Shannon species richness has units of the “effective number of species”. Each \(p\) is a ratio of the number of annotations for each species to the total number of annotations. The species-level annotations are from all the annotation source databases used by MG-RAST. The table of species and number of observations used to calculate this diversity estimate can be downloaded under “download source data” on the Overview page.

Functional categories¶

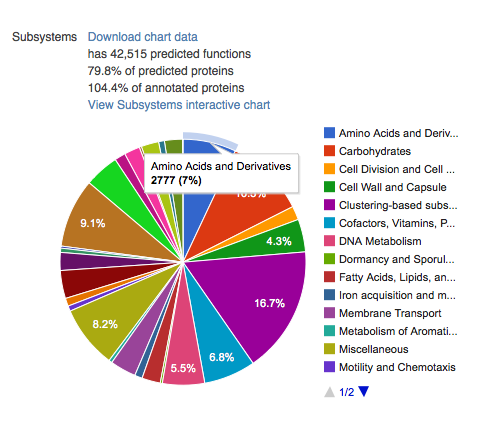

This section contains four pie charts providing a breakdown of the functional categories for KEGG (Kanehisa 2002), COG (Tatusov et al. 2003), SEED Subsystems (Overbeek et al. 2005), and eggNOGs (Jensen et al. 2008). The relative abundance of sequences per functional category can be downloaded as a spreadsheet, and users can browse the functional breakdowns via the Krona tool (Ondov, Bergman, and Phillippy 2011) integrated in the page.

A more detailed functional analysis, allowing the user to manipulate parameters for sequence similarity matches, is available from the Analysis page.

The sample page¶

For each sample MG-RAST displays a sample page shown in figure 5.14, the page displays all sample specific information. The information on this page is derived from the metadata.

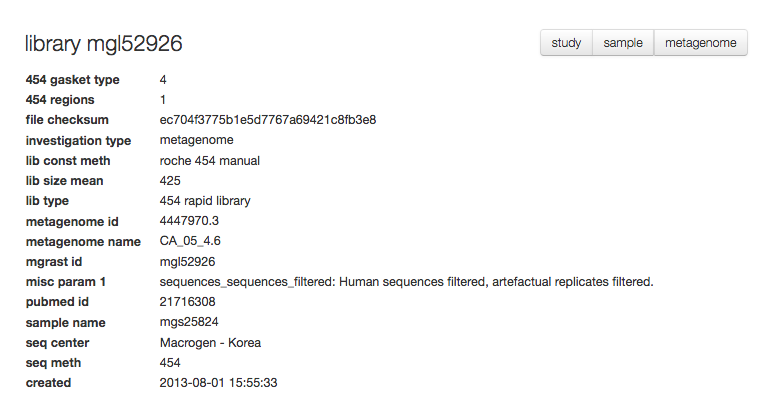

The library page¶

For each set of sequences underlying a data set (“a library”) MG-RAST provides a specific page with information extracted from the metadata.

The analysis page – Comparing data, extracting and downloading data¶

The Analysis page is the core of the MG-RAST system, it consumes the various profiles and allows adjusting of parameters.

. It provides a number of tools to compare data sets with different parameters as well as the ability to drill down into the data (e.g. selecting Actinobacteria or features related to a specific functional gene group (e.g. the Lysine Biosynthesis Subsystem).

Compared to previous version of MG-RAST the Analysis page has seen significant improvements, here we provide a step-by-step guide to using the page

Download profiles to local machine for analysis¶

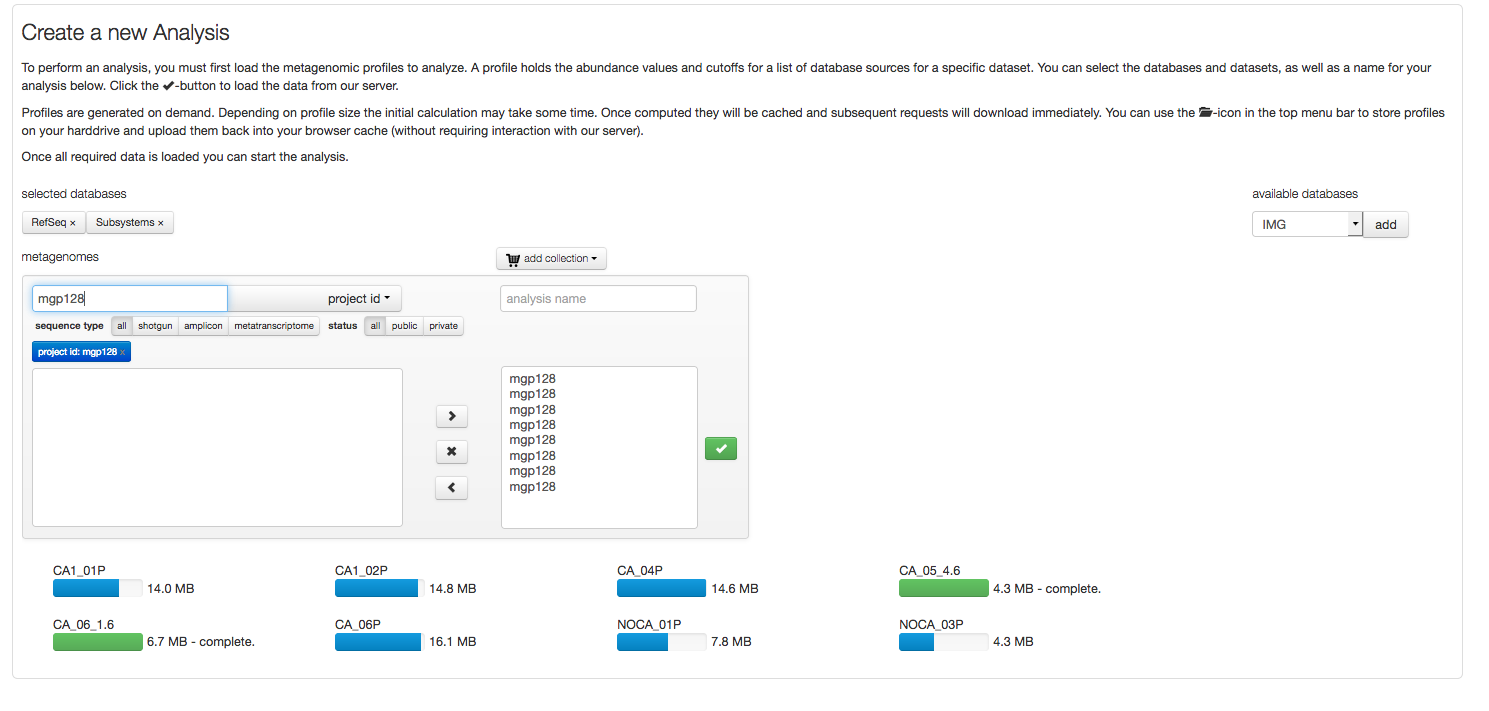

Profiles to be compared, analyzed or visualized need to be downloaded. Figure 5.16 shows an example download of 8 profiles.

After selecting a project (“mgp128”) the Refseq and Subsystem profiles for the respective data sets are loaded. Blue progress bars indicated profiles being uploaded, green bars indicate the download has completed.

After the profiles have been downloaded, the analysis is no longer dependent on the MG-RAST server resources, instead using the computer the browser is running on. This is achieved via the JavaScript functionality in your browser (please make sure its enabled). Also data is stored in memory, providing you with a good reason to maximize the memory (RAM) of the machine you are running the analysis on.

Normalization¶

Normalization refers to a transformation that attempts to reshape an underlying distribution. MG-RAST now uses DEseq, which is an R package to analyse count data from high-throughput sequencing assays. DESeq, as it has been shown to outperform other methods of normalization - in particular, those that use any sort of linear scaling.

Standardization is a transformation applied to each distribution in a group of distributions so that all distributions exhibit the same mean and the same standard deviation. This removes some aspects of intersample variability and can make data more comparable. This sort of procedure is analogous to commonly practiced scaling procedures but is more robust in that it controls for both scale and location.

The Analysis page calculates the ordination visualizations with either raw or normalized counts, at the user’s option. The normalization procedure is as follows.

\(normalized\_value\_i = log2(raw\_counts\_i + 1)\)

The standardized values then are calculated from the normalized values by subtracting the mean of each sample’s normalized values and dividing by the standard deviation of each sample’s normalized values.

\(standardized\_i = (normalized\_i - mean(normalized\_i)) / stddev(normalized\_i)\)

More about these procedures is available in a number of texts. We recommend Terry Speed’s “Statistical Analysis of Gene Expression in Microarray Data” (Speed 2003).

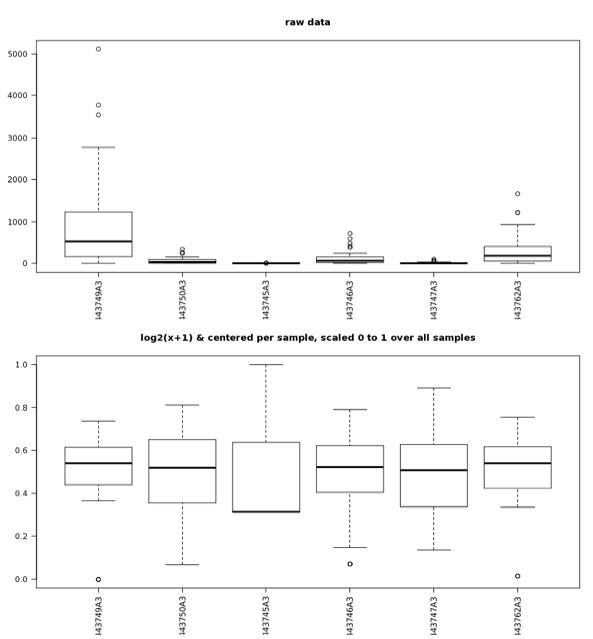

When data exhibit a nonnormal, normal, or unknown distribution, nonparametric tests (e.g., Man-Whitney or Kurskal-Wallis) should be used. Boxplots are easy to use, and the MG-RAST analysis page provides boxplots of the standardized abundance values for checking the comparability of samples (Figure 5.17).

Boxplots of the abundance data for raw values (top) as well as values that have undergone the normalization and standardization procedures (bottom) described in the text. After normalization and standardization, samples exhibit value distributions that are much more comparable and that have a normal distribution; the normalized and standardized data are suitable for analysis with parametric tests; the raw data are not.

Rarefaction¶

The rarefaction view is available only for taxonomic data. The rarefaction curve of annotated species richness is a plot (see Figure 5.18) of the total number of distinct species annotations as a function of the number of sequences sampled. As shown in Figure 5.18, multiple data sets can be included.

The slope of the right-hand part of the curve is related to the fraction of sampled species that are rare. When the rarefaction curve is flat, more intensive sampling is likely to yield only a few additional species. The rarefaction curve is derived from the protein taxonomic annotations and is subject to problems stemming from technical artifacts. These artifacts can be similar to the ones affecting amplicon sequencing (Reeder and Knight 2009), but the process of inferring species from protein similarities may introduce additional uncertainty.

On the Analysis page the rarefaction plot serves as a means of comparing species richness between samples in a way independent of the sampling depth.

Rarefaction plot showing a curve of annotated species richness. This curve is a plot of the total number of distinct species annotations as a function of the number of sequences sampled.

On the left, a steep slope indicates that a large fraction of the species diversity remains to be discovered. If the curve becomes flatter to the right, a reasonable number of individuals is sampled: more intensive sampling is likely to yield only a few additional species.

Sampling curves generally rise very quickly at first and then level off toward an asymptote as fewer new species are found per unit of individuals collected. These rarefaction curves are calculated from the table of species abundance. The curves represent the average number of different species annotations for subsamples of the the complete dataset.

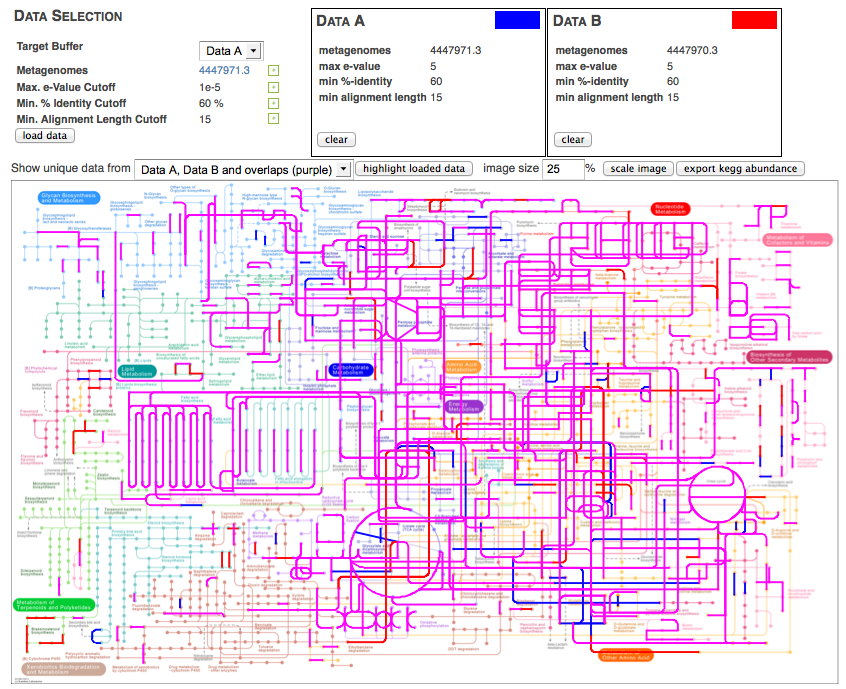

KEGG mapper¶

The KEGG map tool allows the visual comparison of predicted metabolic pathways in metagenomic samples. It maps the abundance of identified enzymes onto a KEGG (Kanehisa 2002) map of functional pathways; note that the mapper is available only for functional data). Users can select from any available KEGG pathway map. Different colors indicate different metagenomic datasets.

The KEGG mapper works by providing two buffers that users can assign datasets to. After loading the buffers with the intended datasets, the KEGG mapper can highlight parts of the KEGG map that are present in the dataset. Several combinations of the two datasets can be displayed, as shown in Figure 5.19. Metagenomes can be assigned into one of two groups, and those groups can be visually compared (see Figure 5.20).

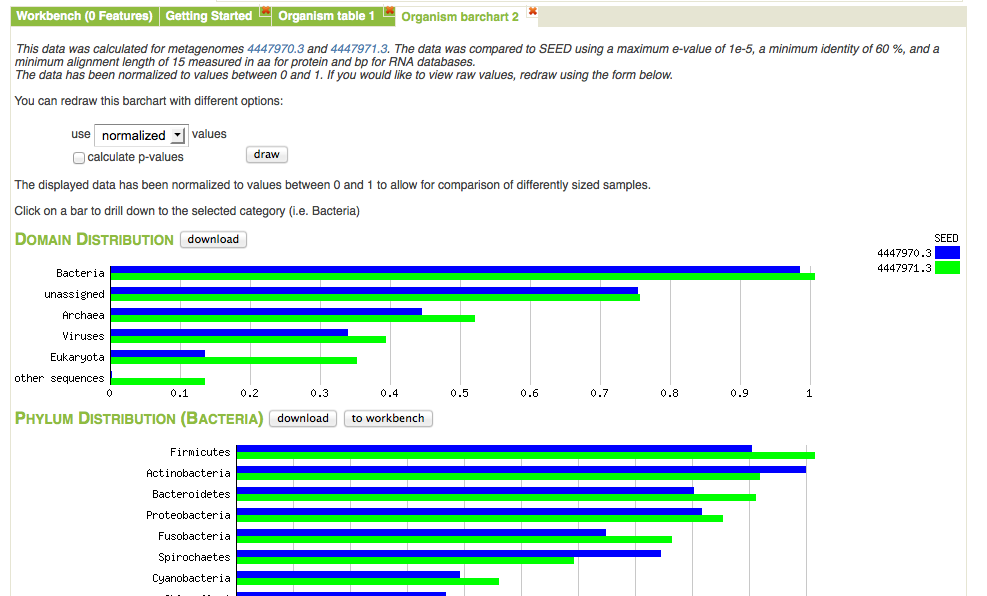

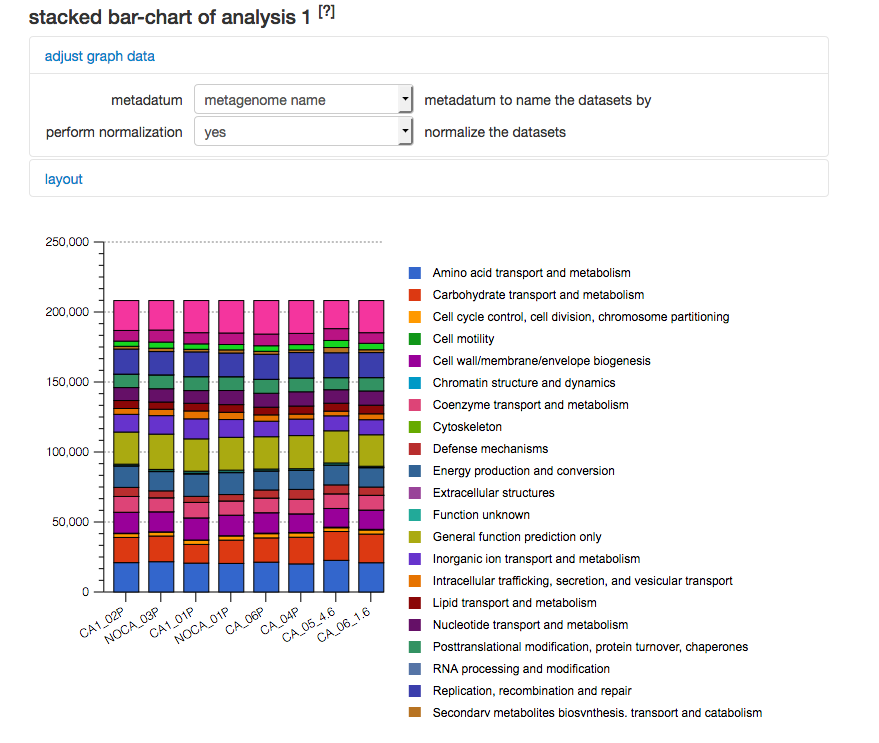

Bar charts¶

Bar chart view comparing normalized abundance of taxa. We have expanded the Bacteria domain to display the next level of the hierarchy.

Figure 5.21 shows the bar chart visualization option on the Analysis page. One important property of the page is the built-in ability to drill down by clicking on a specific category. In this example we have expanded the domain Bacteria to show the normalized abundance (adjusted for sample sizes) of bacterial phyla. The abundance information displayed can be downloaded into a local spreadsheet. Once a subselection has been made (e.g., the domain Bacteria selected).

Heatmap/Dendrogram¶

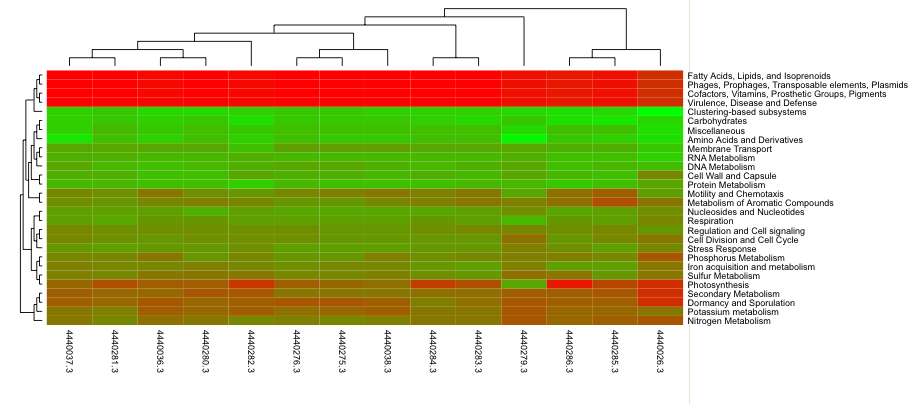

Heatmap/dendogram example in MG-RAST. The MG-RAST heatmap/dendrogram has two dendrograms, one indicating the similarity/dissimilarity among metagenomic samples (x axis dendrogram) and another indicating the similarity/dissimilarity among annotation categories (e.g., functional roles; the y-axis dendrogram).

The heatmap/dendrogram (Figure 5.22) allows an enormous amount of information to be presented in a visual form that is amenable to human interpretation. Dendrograms are trees that indicate similarities between annotation vectors. The MG-RAST heatmap/dendrogram has two dendrograms, one indicating the similarity/dissimilarity among metagenomic samples (x-axis dendrogram) and another indicating the similarity/dissimilarity among annotation categories (e.g., functional roles; the y-axis dendrogram). A distance metric is evaluated between every possible pair of sample abundance profiles. A clustering algorithm (e.g., ward-based clustering) then produces the dendrogram trees. Each square in the heatmap dendrogram represents the abundance level of a single category in a single sample. The values used to generate the heatmap/dendrogram figure can be downloaded as a table by clicking on the download button.

Ordination¶

MG-RAST uses Principle Coordinate Analysis (PCoA) to reduce the dimensionality of comparisons of multiple samples that consider functional or taxonomic annotations. Dimensionality reduction is a process that allows the complex variation found in a large datasets (e.g., the abundance values of thousands of functional roles or annotated species across dozens of metagenomic samples) to be reduced to a much smaller number of variables that can be visualized as simple two- or three-dimensional scatter plots. The plots enable interpretation of the multidimensional data in a human-friendly presentation. Samples that exhibit similar abundance profiles (taxonomic or functional) group together, whereas those that differ are found farther apart.

A key feature of PCoA-based analyses is that users can compare components not just to each other but to metadata recorded variables (e.g., sample pH, biome, DNA extraction protocol) to reveal correlations between extracted variation and metadata-defined characteristics of the samples. It is also possible to couple PCoA with higher-resolution statistical methods in order to identify individual sample features (taxa or functions) that drive correlations observed in PCoA visualizations. This coupling can be accomplished with permutation-based statistics applied directly to the data before calculation of distance measures used to produce PCoAs; alternatively, one can apply conventional statistical approaches (e.g., ANOVA or Kruskal-Wallis test) to groups observed in PCoA-based visualizations.

Table¶

The table tool creates a spreadsheet-based abundance table that can be searched and restricted by the user. Tables can be generated at user-selected levels of phylogenetic or functional resolution. Table data can be visualized by using Krona (Ondov, Bergman, and Phillippy 2011) or can be exported in BIOM(McDonald et al. 2012) format to be used in other tools (e.g., QIIME (Caporaso et al. 2010)). The tables also can be exported as tab-separated text.

Abundance tables serve as the basis for all comparative analysis tools in MG-RAST, from PCoA to heatmap/dendrograms.

Consider the following example showing how to use the taxonomic information derived from an analysis of protein similarities found for the data set 4447970.3. We use the best hit classification, SEED database, \(10^{-5}\) evalue, 60% identity, and a minimal alignment length of 15 amino acids. We select table output. The results are shown in Figure 5.23.

The following control elements are connected to the table:

- group by – allows summarizing entries below the level chosen here to be subsumed.

- download table – downloads the entire table as a spreadsheet.

- Krona – invokes KRONA (Ondov, Bergman, and Phillippy 2011) with the table data.

- QIIME – creates a BIOM(McDonald et al. 2012) format file with the data being displayed in the table.

- table size – changes the number of elements to display for the web page.

Below we explain the columns of the table and the functions available for them. For each column we allow sorting the table by clicking on the upward- and downward-pointing triangles.

metagenome

In the case of multiple datasets being displayed, this column allows sorting by metagenome ID or selection of a single metagenome.

source

This displays the annotation source for the data being displayed.

domain

The domain column allows subselecting from Archaea, Bacteria, Eukarya, and Viruses.

phylum, class

Since we have selected to group results at the class level, only phylum and class are being displayed. The text fields in the column headers allow subsection (e.g., by entering Acidobacteria or Actinobacteria in the phylum field). The searches are performed inside the web browser and are efficient.

Any subselection will narrow down all datasets being displayed in the table.

Users can elect to have the results grouped by other taxonomy levels (e.g., genus), creating more columns in the table view.

abundance

This indicates the number of sequences found with the parameters selected matching this taxonomic unit. (Note that the parameters chosen are displayed on top of the table.) Clicking on the abundance displays another page displaying the BLAT alignments underlying the assignments.

The abundance is calculated by multiplying the actual number of database hits found for the clusters by the number of cluster members.

avg. evalue, avg percent identity, average alignment length

These indicate the average values for E value, percent identity, and alignment length.

hits

This is the number of clusters found for this entity (function or taxon) in the metagenome.

…

This option allows extending the table to display (or hide) additional columns.

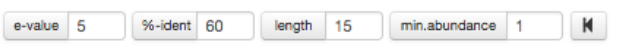

The parameter widget¶

Evalue, percent identity, length and minimum abundance filters¶

As shown in Figure 5.25 MG-RAST can changed the parameters for annotation transfer at analysis time. As each data and each analysis is different, we cannot provide a default parameter set for transferring annotations from the sequence databases to the features predicted for the environmental sequence data.

Instead we provide a tool that puts the user at the helm, providing the means to filter the sequences down by selecting only those matching certain criteria.

*Source type and level filters¶

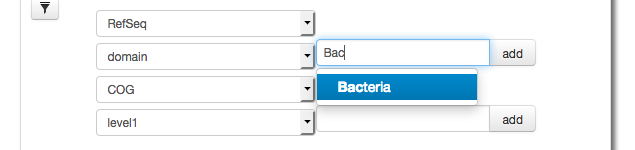

Adding one or more filters will limit the scope of the sequences analyzed to e.g. a the domain Bacteria (see Figure 5.26). We note that multiple filters can be used and they can be individually erased when no longer needed. Thus the user can filter, e.g. a certain phylum and the identify reads associated with a specific functional gene group.

*Example: Display abundance for functional category filtered by taxonomic entities¶

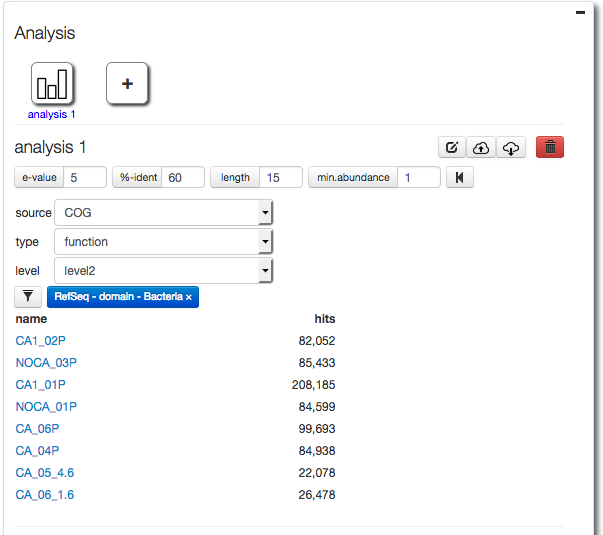

A key feature of the version 4.0 web interface is the ability to filter results. Here we demonstrate filtering results down to the domain Bacteria (Figure 5.27). After the filtering we select COG functional annotations using COG level 2 (Figure 5.28).

The parameter widget allows creation of a Filter for taxonomic units, in this case we use RefSeq annotation to filter at the domain level for Bacteria.

After creating a filter for Bacteria only (using RefSeq taxonomic annotations) we select COG functional annotations using COG level 2.

COG level 2 abundance filtered for Bacteria. The results for the settings shown in Figure 5.28

Viewing Evidence¶

For individual proteins, the MG-RAST page allows users to retrieve the sequence alignments underlying the annotation transfers (see Figure 5.30). Using the M5nr (Wilke et al. 2012) technology, users can retrieve alignments against the database of interest with no additional overhead.

Standard operating procedures SOPs for MG-RAST¶

SOP - Metagenome submission, publication and submission to INSDC via MG-RAST¶

MG-RAST can be used to host data for public access. There are three interfaces for uploading and publishing data, the Web interface, intended for most users, command line scripts, intended for programmers, and the native RESTful API, recommended for experienced programmers.

When data is published in MG-RAST, it can also be released to the INSDC databases. This tutorial covers both use cases.

We note that MG-RAST provides temporary IDs and permanent public identifiers. The permanent identifiers are assigned at the time data is made public. Permanent MG-RAST identifiers begin with “mgm” (e.g. “mgm4449249.3”) for data sets and mgp (e.g.”mgp128”) for projects/studies.

The following data types are supported:

- Shotgun metagenomes (“raw” and assembled)

- Metatranscriptome data (“raw” and assembled)

- Ribosomal amplicon data (16s, 18s, ITS amplicons)

- Metabarcoding data (e.g. cytochrome C amplicons; basically all non ribosomal amplicons)

PLEASE NOTE: We strongly prefer raw data over assembled data, if you submit assembled data, please submit the raw reads in parallel. If you perform local optimization e.g. adapter removal or quality clipping, please submit the raw data as well.

This document is intended for experienced to very experienced users and programmers. We recommend that most users not use the RESTful API. There is also a document describing data publication and INSDC submission via the web UI.

An access token for the MG-RAST API, this can be obtained from the MG-RAST web page (http://mg-rast.org) in the user section.

You will need a working python interpreter and the command line scripts and example data can be found in https://github.com/MG-RAST/MG-RAST-Tools:

Scripts: MG-RAST-Tools/tools/bin Data: MG-RAST-Tools/examples/sop/data

Change into MG-RAST-Tools/examples/sop/data and call:

sh get_test_data.sh

to add additional example data.

Either download the repository as a zipped archive from https://github.com/MG-RAST/MG-RAST-Tools/archive/master.zip or use the git command line tool:

git clone http://github.com/MG-RAST/MG-RAST-Tools.git

We tested up to the following parameters:

- max. size per file: 10GB

- max. project size: 200 metagenomes

While there is no reason to assume the software will not work with larger numbers of files or larger files, we did not test for that.

SOP:¶

Upload and submit sequence data and metadata to MG-RAST using the command mg-submit.py Note: This is an asynchronous process that may take some time depending on the size and number of datasets. (Note: We recommend that novice users try the web frontend; the cmd-line is primarily intended for programmers) The metadata in this example is in Microsoft Excel format, there is also an option of using JSON formatted data. Please note: We have observed multiple problems with spreadsheets that were converted from older version of Excel or “compatible” tools e.g. OpenOffice.

Example:

mg-submit.py submit simple .... --metadata

Verify the results and obtain a temporary identifier E.g. by using the WebUI at http://mg-rast.org – you can also use that to publish the data and trigger submission to INSDC.

Publish your project in MG-RAST and obtain a stable and public MG-RAST project identifier

Note: once the data is made public the data is read only, but metadata can be improved

Example:

mg-project make-public $temporary_ID

Trigger release to INSDC/ submit to EBI

Note: Metadata updates are automatically synced with INSDC databases within 48 hours.

Example:

mg-project submit-ebi $PROJECT_ID

Check status of release to INSDC/ submission to EBI

Note: This is an asynchronous process that may take some time depending on the size and number of datasets.

Example:

mg-project status-ebi $PROJECT_ID

We include a sample submission below:

From within the MG-RAST-Tool repository directory

# Retrieve repository and setup environment

git clone http://github.com/MG-RAST/MG-RAST-Tools.git

cd MG-RAST-Tools

# Path to scripts for this example

PATH=$PATH:`pwd`/tools/bin

# set environment variables

source set_env.sh

# Set credentials, obtain token from your user preferences in the UI

mg-submit.py login --token

# Create metadata spreadsheet. Make sure you map your samples to your

# sequence files

# Upload metagenomes and metadata to MG-RAST

mg-submit.py submit simple \

examples/sop/data/sample_1.fasta.gz \

examples/sop/data/sample_2.fasta.gz \

--metadata examples/sop/data/metadata.xlsx

# Output

> Temp Project ID: ed2102aa666d676d343735323836382e33

> Submission ID: 77a1a1a5-4cbd-4673-86bf-f87c9096c3e1

# Remember IDs for later use

SUBMISSION_ID=77a1a1a5-4cbd-4673-86bf-f87c9096c3e1

TEMP_ID=mgp128

# Check if project is finished

mg-submit.py status $SUBMISSION_ID

# Output

> Submission: 77a1a1a5-4cbd-4673-86bf-f87c9096c3e1 Status: in-progress

# Make project public in MG-RAST

mg-project.py make-public $TEMP_ID

# Output

> # Your project is public.

> Project ID: mgp128

> URL: https://mg-rast.org/linkin.cgi?project=mgp128

PROJECT_ID=mgp128

# Release project to INSDC archives

mg-project.py submit-ebi $PROJECT_ID

# Output

> # Your Project mgp128 has been submitted

> Submission ID: 0cf7d811-1d43-4554-ab97-3cb1f5ceb6aa

# Check if project is finished

mg-project.py status-ebi $PROJECT_ID

# Output

> Completed

> ENA Study Accession: ERP104408

REST API uploader¶

The following upload instructions are for using the MG-RAST REST API with the curl program. In order to operate the API the user has to authenticate with an MG-RAST token. The token can be retrieved from the “Account Management” –\(>\) “Manage personal preferences” –\(>\) “Web Services” –\(>\) “authentication key” page via MG-RAST Web site.

We strongly suggest that you use the scripts we provide, instead of the native REST API.

You can upload a file into your inbox with

curl -X POST -H "auth: <myToken>" -F "upload=@<path_to_file>/<file_name>" "https://api.mg-rast.org/inbox"

If you have a compressed file to upload, supports gzip or bzip2

curl -X POST -H "auth: <myToken>" -F "upload=@<path_to_file>/<gzip_file>" -F "compression=gzip" "https://api.mg-rast.org/inbox" curl -X POST -H "auth: <myToken>" -F "upload=@<path_to_file>/<gzip_file>" -F "compression=bzip2" "https://api.mg-rast.org/inbox"

If you have an archive file containing multiple files to upload do the following two steps, supports: .zip, .tar, .tar.gz, .tar.bz2

1. curl -X POST -H "auth: <myToken>" -F "upload=@<path_to_file>/<archive_file>" "https://api.mg-rast.org/inbox" 2. curl -X POST -H "auth: <myToken>" -F "format=<one of: zip, tar, tar.gz, tar.bz2>" "https://api.mg-rast.org/inbox/unpack/<uploaded_file_id>"

Generating metadata for the submission¶

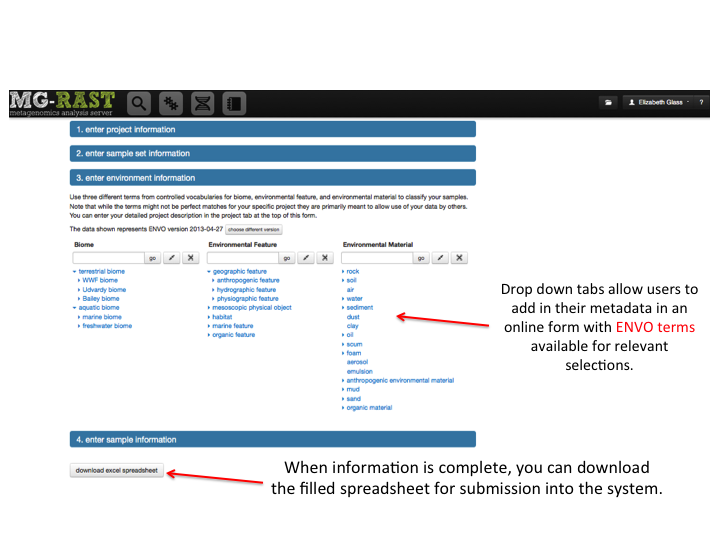

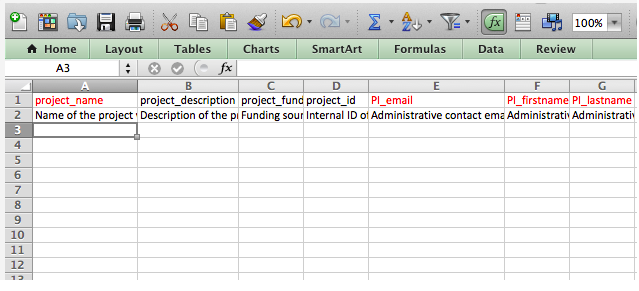

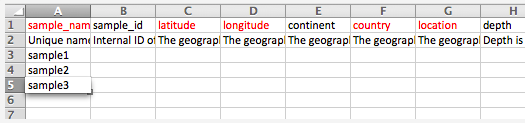

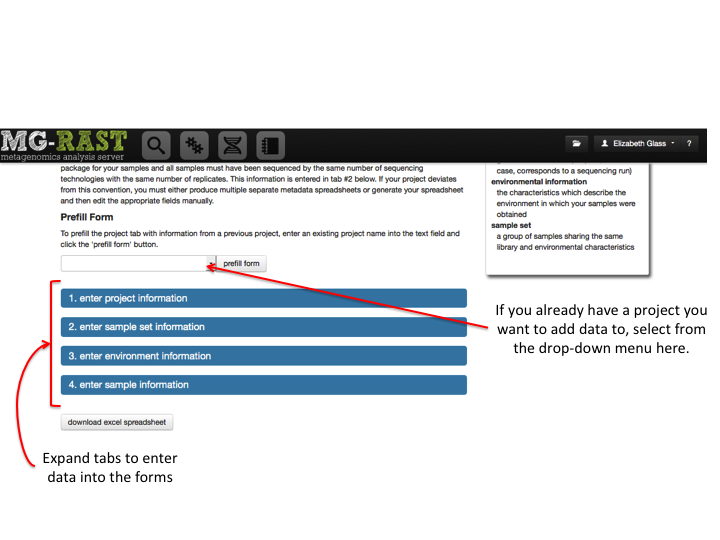

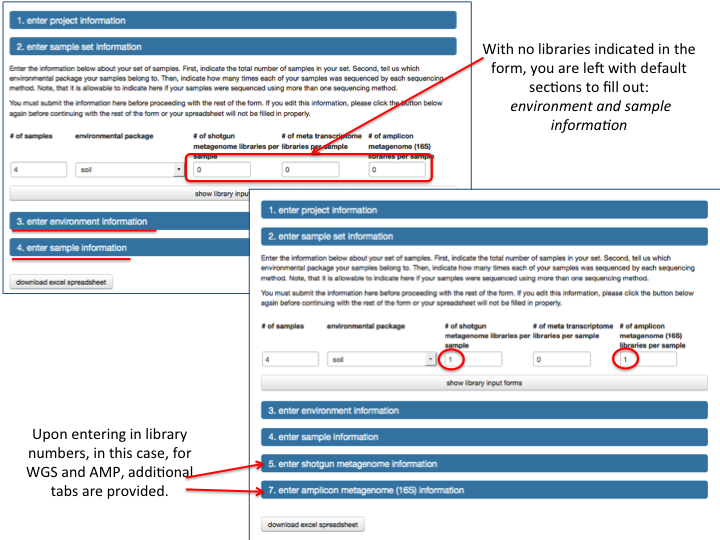

MG-RAST uses questionnaires to capture metadata for each project with one or more samples. Users have two options, they can download and fill out the questionnaire and then submit it or use our online editor, MetaZen. Questionnaires are validated automatically by MG-RAST for completeness and compliance with the controlled vocabularies for certain fields.